Did you know you can run serverless in Kubernetes?

What is serverless?

Serverless is a trending technology in software development and modern architectures.

It is a cloud-native development model that abstracts away all the infrastructural plumbing underneath the code, allowing developers to build and run applications without having to care about servers at all. This way, developers can focus solely on their code.

Make no mistake: there are still servers in serverless, but they are abstracted away from app development. All the work of provisioning, maintaining, scaling and updating the server infrastructure is handled by the cloud provider.

Developers package their code for deployment, and the implementation details are hidden from the user. The team or person writing the code doesn’t have to deal with the infrastructure underneath.

Once deployed, serverless apps respond to demand and automatically scale up and down as needed. Because serverless offerings usually follow an on-demand pricing model, a serverless function that is sitting idle doesn't generate any costs, which makes serverless a very cost-efficient way to implement microservices.

Serverless architectures are at the extreme end of the microservice spectrum, being even more fine-grained and loosely coupled. Serverless functions complement more traditional microservice and virtual machine (VM)-based approaches and regular third-party cloud services for event queueing, messaging, databases, and more.

Kubernetes and Serverless

Why is it important?

Kubernetes is an open source platform for automating deployment, scaling and managing containerized applications.

It is very flexible and portable: apps can run on a laptop, in a public cloud, in an on-premises data center, or in a managed Kubernetes cluster.

Every developer wants to be able to focus more on the details of the code rather than the infrastructure where that code runs. And serverless is a perfect way of meeting that need.

However, organizations often use a variety of services from different vendors, together with existing workloads in their on-premises data center. Developers are better off with a single serverless experience across all platforms, because it saves time, resources and the effort of learning new tools.

Other platforms that let you run serverless applications (deployed as single functions or running inside containers) come with drawbacks: vendor lock-in, limits on the size of the application binary or artifacts, and cold-start performance.

Luckily, there is no need to choose between serverless and Kubernetes. Several open-source platforms available today let you deploy efficient and stable serverless applications on Kubernetes while addressing the issues mentioned above.

How to run Serverless on Kubernetes?

Here are some projects you can use to run your serverless functions natively on Kubernetes:

OpenFaaS

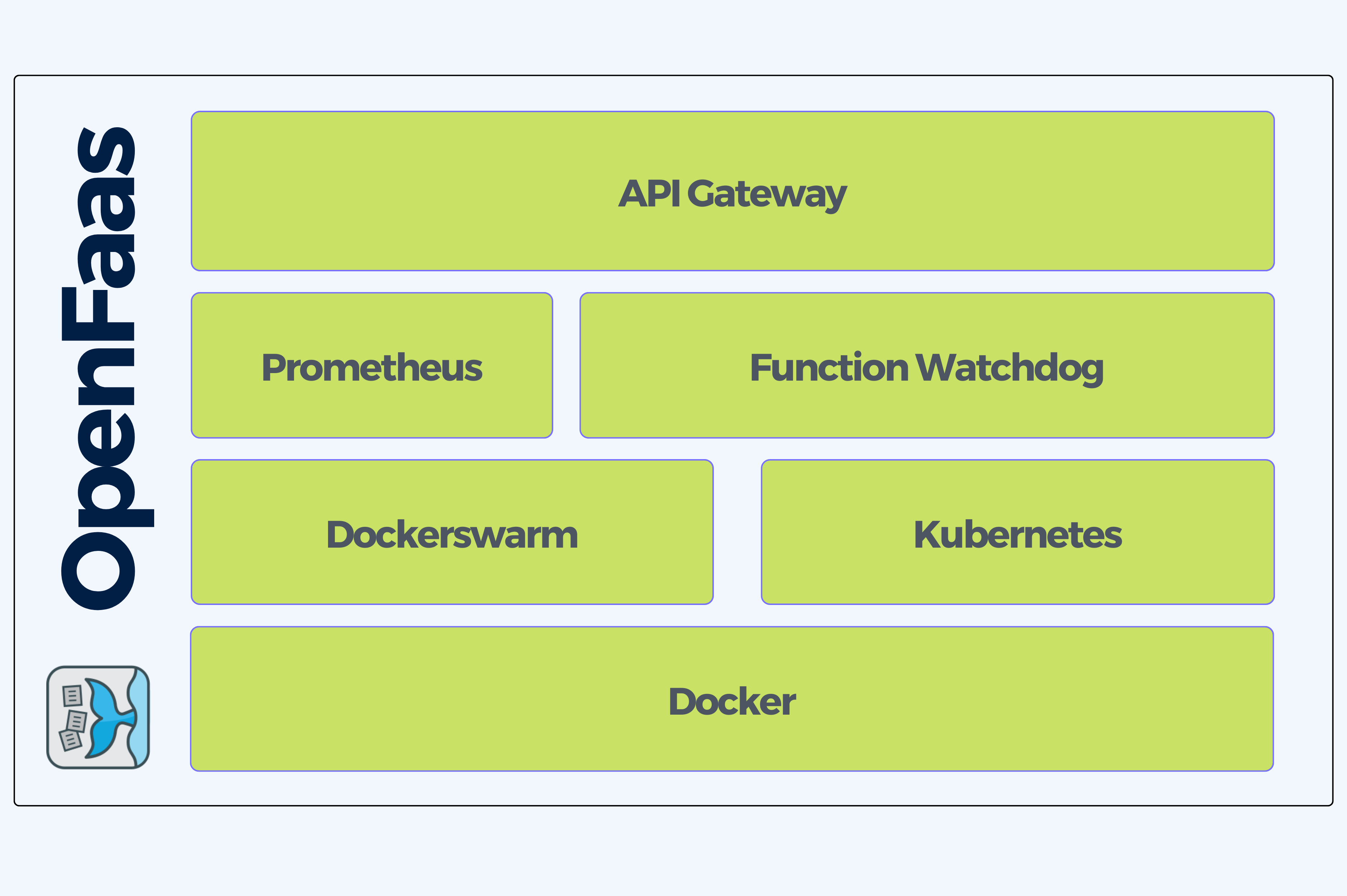

OpenFaaS is an open source serverless platform that supports serverless functions deployed on Docker Swarm or Kubernetes and has a large, active community of contributors. It is a containerized application, which makes it highly portable.

It allows you to use any language inside the containerized functions to write your code. The setup is simple, offering a default configuration that is also customizable. There is no limit on runtime for functions.

OpenFaaS is managed using the faas-cli. To deploy OpenFaaS to Kubernetes you can use a Helm chart or raw resource YAML specs.

OpenFaaS uses Prometheus to expose function metrics and to drive its default auto-scaling behavior (which can be swapped out for the Kubernetes Horizontal Pod Autoscaler, HPA).

Architecture:

- Watchdog: A lightweight Go-based web server that acts as a generic entry point for functions. It receives an HTTP request and invokes a function by forwarding the request via standard input (stdin) and awaiting the response via standard output (stdout).

- Queue Worker: Works with a NATS queue to provide asynchronous invocation of functions with a configurable callback URL.

- API gateway: Routes requests to functions and collects metrics, which are exposed through Prometheus.
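The watchdog's stdin/stdout contract can be illustrated with a small sketch. This is not the OpenFaaS watchdog itself (which is written in Go); it is a minimal Python model of the same idea, and `invoke_function` and `UPPERCASE_FN` are hypothetical names introduced only for this example.

```python
import subprocess
import sys


def invoke_function(command: list[str], request_body: bytes,
                    timeout_s: float = 10.0) -> tuple[int, bytes]:
    """Start one process per request, write the body to its stdin,
    and treat whatever it writes to stdout as the response body."""
    result = subprocess.run(
        command,
        input=request_body,          # request body goes to stdin
        stdout=subprocess.PIPE,      # response body is read from stdout
        stderr=subprocess.PIPE,
        timeout=timeout_s,
        check=False,
    )
    if result.returncode == 0:
        return 200, result.stdout
    # A non-zero exit code is mapped to an HTTP 500 in this sketch.
    return 500, result.stderr


# Hypothetical "function": a child Python process that upper-cases its input.
UPPERCASE_FN = [
    sys.executable,
    "-c",
    "import sys; sys.stdout.write(sys.stdin.read().upper())",
]

if __name__ == "__main__":
    status, body = invoke_function(UPPERCASE_FN, b"hello")
    print(status, body.decode())
```

Because the function only needs to read stdin and write stdout, any language that can do both fits this model, which is one way to read the claim that any language can be used inside a function container.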

Pros:

- Lots of prebuilt triggers and runtimes available

- Useful metrics available out of the box

- Detailed performance test instructions

- Popular and has an active community

Cons:

- Lengthy cold-start time for some programming languages.

Fission

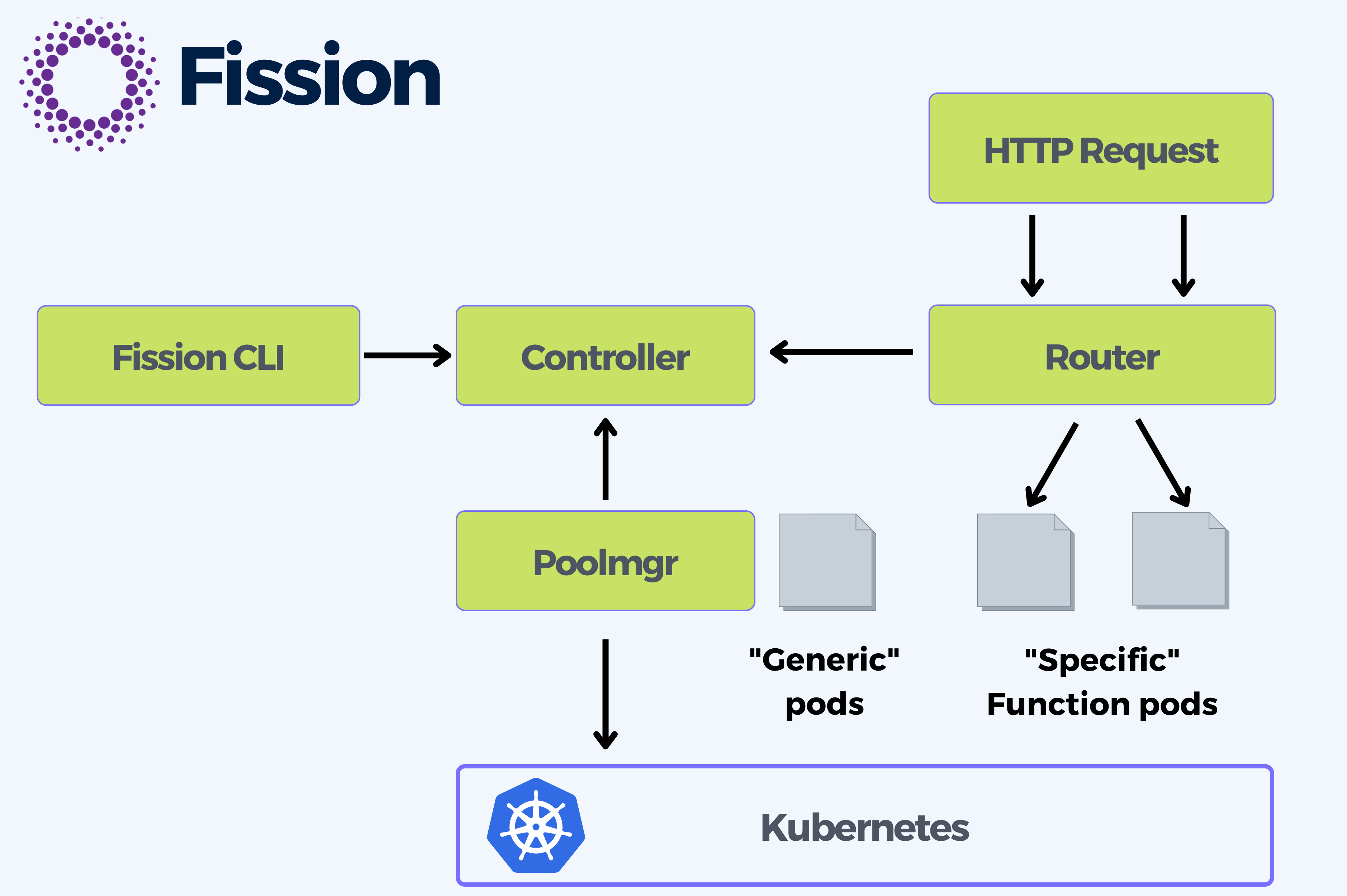

Fission is another open source serverless framework, built by Platform9 along with other contributors. It allows developers to concentrate on coding and takes care of the plumbing with Kubernetes, facilitating productivity and high performance. It is written in Go, offers runtime language flexibility, supports HTTP events and can be set up easily.

It comes with three main concepts:

- Environment: This refers to a pre-built Docker image providing the runtime components such as a specific language installation along with a web server and dynamic loader used to wire a request or event into application code upon invocation.

- Function: This is the application code, following Fission's structure.

- Trigger: This is something that causes a function to be executed. At the time of writing this can be an HTTP request, a time-based trigger (like cron) or a message in a queue (NATS, Kafka or Azure Storage Queue).

Fission provides a CLI, distributed as a binary, that is used to administer the Fission platform. It allows creating, deleting and updating functions, environments and triggers, as well as viewing invocation logs.
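To make the relationship between the three concepts concrete, here is a small in-memory model. It is only a sketch of how an environment, a function and an HTTP trigger relate to each other; it does not use any Fission API, and every class, field and image name in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass(frozen=True)
class Environment:
    name: str
    image: str  # pre-built runtime image (hypothetical name in the usage below)


@dataclass(frozen=True)
class Function:
    name: str
    env: Environment
    handler: Callable[[str], str]  # the application code


@dataclass(frozen=True)
class HttpTrigger:
    method: str
    path: str
    function_name: str


class Router:
    """Maps incoming HTTP requests to functions via registered triggers."""

    def __init__(self) -> None:
        self._functions: Dict[str, Function] = {}
        self._routes: Dict[Tuple[str, str], str] = {}

    def register(self, fn: Function) -> None:
        self._functions[fn.name] = fn

    def add_trigger(self, trigger: HttpTrigger) -> None:
        if trigger.function_name not in self._functions:
            raise KeyError(f"unknown function: {trigger.function_name}")
        self._routes[(trigger.method.upper(), trigger.path)] = trigger.function_name

    def handle(self, method: str, path: str, body: str) -> Optional[str]:
        name = self._routes.get((method.upper(), path))
        if name is None:
            return None  # no trigger matches this request
        return self._functions[name].handler(body)


if __name__ == "__main__":
    python_env = Environment(name="python", image="example/python-env")
    hello = Function(name="hello", env=python_env, handler=lambda body: f"Hello, {body}!")
    router = Router()
    router.register(hello)
    router.add_trigger(HttpTrigger(method="GET", path="/hello", function_name="hello"))
    print(router.handle("GET", "/hello", "world"))
```

The same function could be wired to a timer or a queue message instead of an HTTP route; only the trigger changes, not the function or its environment.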

Pros:

- Choice of executors, allowing for scaling to zero or keeping pods warm to avoid “cold start”

- Nice Prometheus integration

Cons:

- Most of Fission’s components can’t scale up (only the router currently), leaving some uncertainty about performance at real scale

Knative

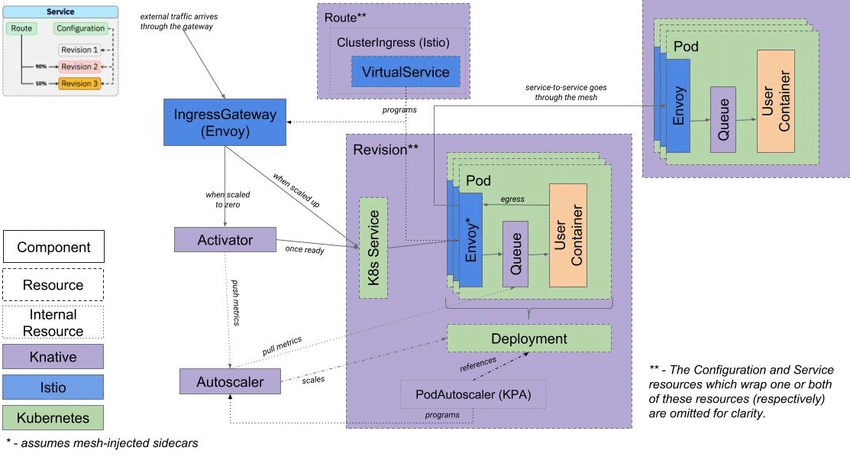

Knative is an open source initiative that allows you to develop and deploy container-based serverless applications that you can easily port between cloud providers. It was originally developed by Google with the help of Red Hat and IBM. It is dependent on Istio, a project which heavily uses the lightweight Envoy proxy built at Lyft.

Knative can be enabled on Google Kubernetes Engine or it can be deployed to any cluster using a Kubernetes Operator.

It combines Kubernetes with serverless and allows you to build serverless solutions on top of Kubernetes. Knative supports autoscaling, in-cluster builds, and an eventing framework for cloud-native applications on Kubernetes.

It consists of three high level components:

- Building: provides tools for building containers inside the cluster from source code. It builds on existing Kubernetes primitives and also extends them.

- Serving: the runtime component that supports the deployment of serverless applications and functions, automatic scaling up from zero, routing and network programming for service mesh (Istio) components, and snapshots of the deployed code and configurations.

- Eventing: takes care of universal subscription, delivery, and event management, as well as communication between loosely coupled architecture components. It deals with both producing and consuming events and allows you to scale with the load on the server.
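The scaling behavior of the Serving component can be pictured as a function from observed load to a pod count. The sketch below is a deliberately simplified model of concurrency-based scaling, not Knative's autoscaler: the function name, its parameters and the `max_replicas` cap are assumptions made for illustration, and a real autoscaler also smooths its metrics over time.

```python
import math


def desired_replicas(in_flight_requests: float, target_per_pod: float,
                     max_replicas: int = 10) -> int:
    """Return how many pods are needed so that each pod handles at most
    `target_per_pod` concurrent requests, scaling to zero when idle."""
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if in_flight_requests <= 0:
        return 0  # no traffic: scale to zero
    return min(max_replicas, math.ceil(in_flight_requests / target_per_pod))


if __name__ == "__main__":
    for load in (0, 1, 25, 1000):
        print(load, desired_replicas(load, target_per_pod=10))
```

Scaling to zero is what removes the idle cost of a function; the price is that the first request after an idle period has to wait for a pod to start.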

Pros:

- Backed by Google, the author of Kubernetes

- Kubernetes Native

- Local event-based architecture with no restrictions imposed by public cloud services

- Automatic scaling

- High adoption rate and greater adoption potential

Cons:

- Provisioning Knative and its dependencies creates 110 CRDs, 24 deployments, 3 daemonsets and 51 containers in total, and that’s before deploying any functions!

- The minimum recommended cluster size is four n1-standard-4 nodes in GKE. This has a cost of just under $400 per month, which could be seen as a significant cost overhead, depending on the desired scale, team size and budget.

OpenWhisk

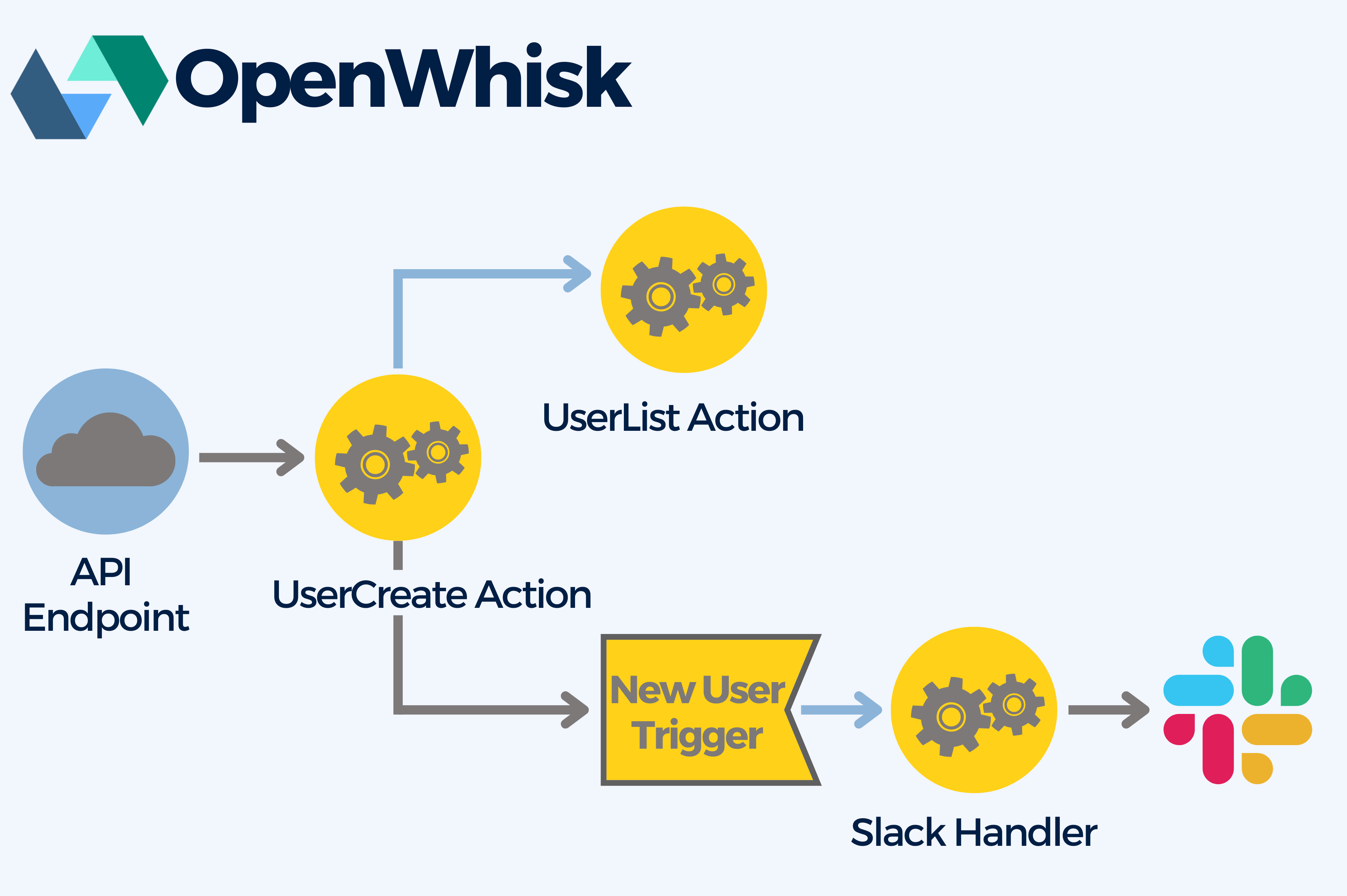

Apache OpenWhisk is an open source, function as a service (FaaS) platform for serverless computing. It uses cloud computing resources as services for building cloud applications and allows you to execute functions remotely while responding to events.

With OpenWhisk you can create serverless APIs from functions, compose functions into serverless workflows, and connect events to functions using rules and triggers.

It supports multiple languages like Node.js, Swift, Java, PHP, Python, and additional languages via Docker containers.

OpenWhisk can be installed on a managed Kubernetes cluster provisioned from a public cloud provider (e.g., AKS, EKS, IKS, GKE), or on a cluster you manage yourself.

To run OpenWhisk locally you can use Docker with Kubernetes enabled. You will also need to use Helm as the package manager for the local Kubernetes cluster.

It introduces a number of concepts:

- Actions: functions containing application code in the language you choose.

- Triggers: named classes of events, e.g. messages published to a topic or HTTP requests.

- Feeds: stream of events e.g. inbound webhook calls. It can be implemented using three patterns, Hooks, Polling and Connections, by creating an action (function) that accepts a set of defined parameters.

- Alarms: used to create periodic, time-based triggers.

- Rules: they associate one trigger with one action and inject the trigger event as an input.
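The sketch below shows how an action, a trigger and a rule fit together. The `main(args)` function follows the shape of an OpenWhisk Python action, which receives a dictionary and returns a dictionary. The `RuleTable` class is not part of OpenWhisk; it is a hypothetical in-memory stand-in used here only to show that firing a trigger passes the event to every action bound to it by a rule.

```python
from typing import Callable, Dict, List

Action = Callable[[dict], dict]


def main(args: dict) -> dict:
    """An action: takes the event parameters and returns a JSON-like result."""
    name = args.get("name", "stranger")
    return {"greeting": f"Hello, {name}!"}


class RuleTable:
    """Hypothetical stand-in for the platform's rule handling."""

    def __init__(self) -> None:
        self._rules: Dict[str, List[Action]] = {}

    def add_rule(self, trigger_name: str, action: Action) -> None:
        # Each rule binds one trigger to one action; a trigger may have several rules.
        self._rules.setdefault(trigger_name, []).append(action)

    def fire(self, trigger_name: str, event: dict) -> List[dict]:
        # The trigger event is injected as the input of each bound action.
        return [action(event) for action in self._rules.get(trigger_name, [])]


if __name__ == "__main__":
    rules = RuleTable()
    rules.add_rule("user-signed-up", main)
    print(rules.fire("user-signed-up", {"name": "Ada"}))
```

Keeping the binding in a rule rather than in the action means the same action can be reused for different event sources without changing its code.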

Pros:

- Option for self-managing or consuming a hosted version on IBM Bluemix

- Open source

- High-quality features

- High number of contributors.

Cons:

- Complex architecture and setup

- Written in Scala (all others are Go-based)

- Heavy tools (CouchDB, Kafka, Nginx, Redis, and Zookeeper) may cause difficulties for developers and operations.

Fn Project

The Fn project is an open-source, container-native and cloud-agnostic serverless platform that you can run on any cloud or on-premises with a Docker engine. It’s easy to use and supports any programming language, with deeper functionality available for Java, Go, Python, and Ruby. It is extensible and performant.

When provisioning Fn on Kubernetes it depends upon cert-manager, ingress-nginx, MySQL and Redis. Functions are built into a Docker image, pushed to the registry and deployed using the fn CLI. HTTP is the only supported trigger type.

It is composed of the following components:

- Fn Server: The core component of the platform, which manages build, deployment and scaling for functions. Described as being multi-cloud and container-native.

- Load balancer: Routes requests to functions and keeps track of “hot functions”, those that have their image pre-pulled to a node and are ready to receive requests.

- Fn FDKs: Function Development Kits allow developers to bootstrap functions in their chosen language by providing a data binding model for function inputs.

- Fn Flow: Allows developers to orchestrate workflows for functions, e.g. parallel, sequential and fan-out style execution.
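The workflow styles mentioned for Fn Flow can be illustrated in plain Python. The sketch below does not use the Fn Flow API; `run_sequential` and `fan_out` are hypothetical helpers that only show what sequential and fan-out execution mean, with ordinary Python callables standing in for deployed functions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_sequential(steps: Iterable[Callable[[T], T]], value: T) -> T:
    """Sequential execution: each step receives the previous step's output."""
    for step in steps:
        value = step(value)
    return value


def fan_out(fn: Callable[[T], R], inputs: Iterable[T]) -> List[R]:
    """Fan-out execution: run the same function on many inputs in parallel
    and gather the results in input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(fn, inputs))


if __name__ == "__main__":
    print(run_sequential([lambda x: x + 1, lambda x: x * 2], 3))
    print(fan_out(lambda x: x * x, [1, 2, 3]))
```

In a real platform each step would be a separate function invocation over the network, so an orchestrator also has to deal with retries and partial failures, which this sketch leaves out.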

Pros:

- Build and push of Docker images is abstracted away from the developer, meaning little knowledge of Docker is required

- Pluggable message queues and databases, so you can bring your own

- A serverless provider is available for deploying and managing Fn functions

Conclusion

The convergence of serverless technology with Kubernetes offers developers a powerful solution for building and deploying FaaS applications even outside of public clouds.

Serverless architecture, with its focus on abstracting away infrastructure concerns, allows developers to concentrate solely on writing code, resulting in increased productivity and faster development cycles.

Kubernetes, on the other hand, provides the scalability, portability, and management capabilities required to run containerized applications efficiently.

By leveraging serverless frameworks specifically designed for Kubernetes, such as OpenFaaS, Fission, Knative, OpenWhisk or the Fn Project, developers can harness the benefits of serverless without being bound to AWS, GCP or other clouds.

These frameworks enable the seamless deployment and scaling of serverless functions within a Kubernetes cluster, eliminating the need for vendor lock-in and offering greater flexibility in running serverless workloads.

Ready to leverage the power of serverless in Kubernetes for your application development needs? Our team of experts is here to help! Get in touch with us!