Did you know that Linkerd is the world's lightest, fastest service mesh?

First things first: what is a service mesh?

A service mesh is a tool that helps you control how the different parts of an application (services) share data with one another. It is a dedicated infrastructure layer that runs alongside the application. It adds observability, security, and reliability features at the platform layer rather than the application layer. In other words, it adds these features without any source code changes and takes care of the communication between services.

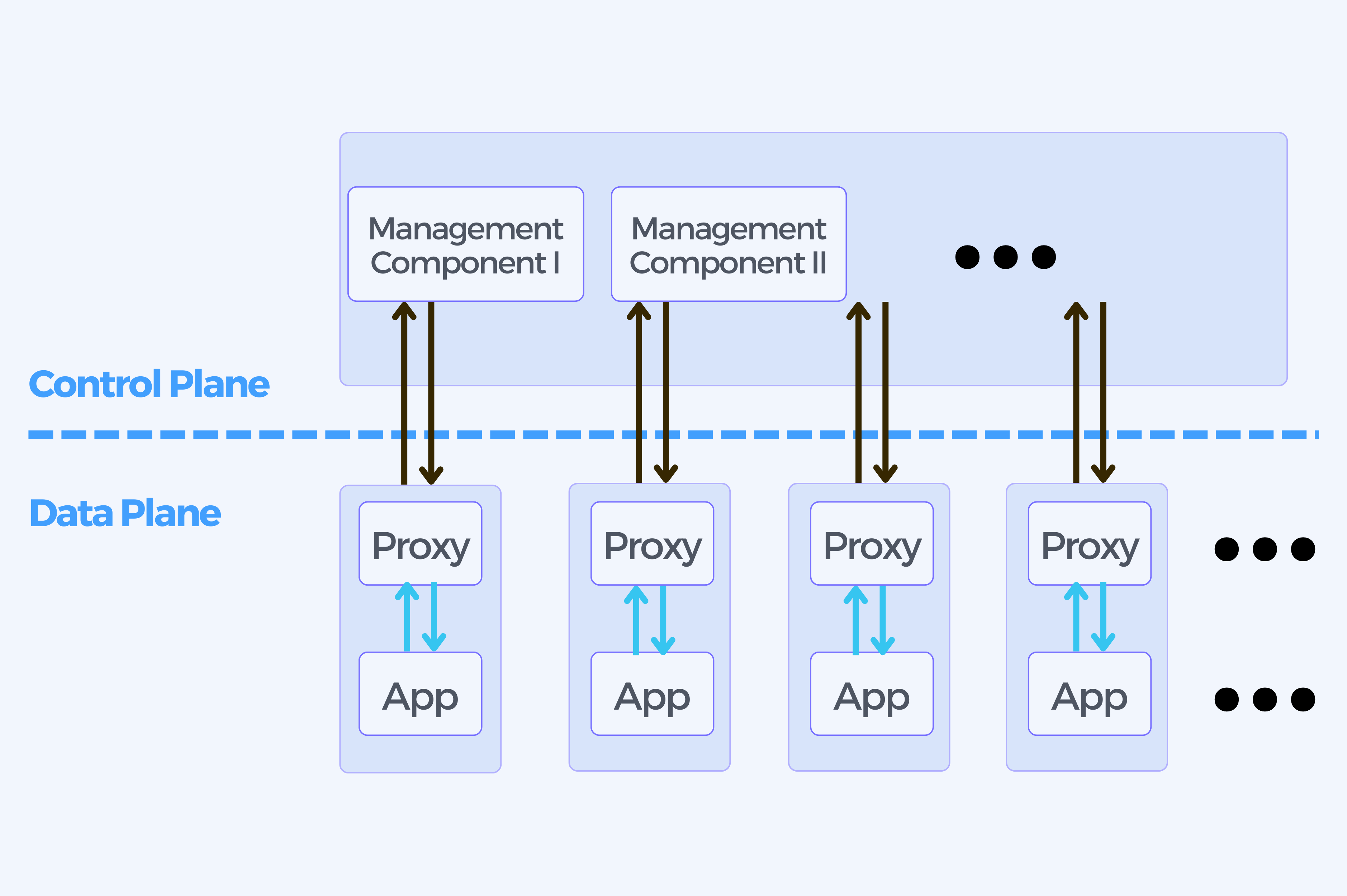

A service mesh is composed of management processes (the control plane) and a scalable set of network proxies (the data plane). The proxies are deployed next to your application code or services, typically as sidecar containers.

The data plane is controlled as a whole by its control plane.

The proxies control the communication between services, and they are also the point where the service mesh features are introduced.

The control plane manages the behavior of the proxies, and provides an API for the operator to manipulate and measure the mesh as a whole.
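The following minimal, in-process Python sketch makes the split between the two planes concrete. All class and method names (`ControlPlane`, `SidecarProxy`, `get_config`, `report`, `push_stats`) are hypothetical. In a real mesh, the proxy is a separate process in the pod and talks to the control plane over the network. What the sketch shows is that the application handler stays unchanged while the proxy adds two things: retries, configured by the control plane, and request counts, reported back to it.

```python
class ControlPlane:
    """Hypothetical control plane: hands out config and collects proxy reports."""

    def __init__(self, retries=2):
        self.config = {"retries": retries}
        self.reports = {}

    def get_config(self):
        # Proxies pull a copy of the current configuration.
        return dict(self.config)

    def report(self, proxy_id, stats):
        # Proxies push their local stats; the control plane aggregates them.
        self.reports[proxy_id] = dict(stats)

    def total_requests(self):
        return sum(s["requests"] for s in self.reports.values())


class SidecarProxy:
    """Hypothetical sidecar that wraps an unmodified application handler."""

    def __init__(self, proxy_id, app, control_plane):
        self.proxy_id = proxy_id
        self.app = app
        self.control_plane = control_plane
        self.stats = {"requests": 0, "failures": 0}

    def handle(self, request):
        retries = self.control_plane.get_config()["retries"]
        self.stats["requests"] += 1
        for attempt in range(retries + 1):
            try:
                return self.app(request)
            except ConnectionError:
                # Retry transient failures; give up after the last attempt.
                if attempt == retries:
                    self.stats["failures"] += 1
                    raise

    def push_stats(self):
        self.control_plane.report(self.proxy_id, self.stats)


# The application code knows nothing about the mesh.
calls = {"n": 0}

def flaky_app(request):
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("transient failure")
    return f"hello {request}"


cp = ControlPlane(retries=2)
proxy = SidecarProxy("web-1", flaky_app, cp)
print(proxy.handle("alice"))  # hello alice (succeeds on the retry)
proxy.push_stats()
print(cp.reports)             # {'web-1': {'requests': 1, 'failures': 0}}
```

Note that `flaky_app` contains no retry or metrics code. All of that lives in the proxy and is steered by the control plane, which mirrors how the mesh adds features "at the platform layer".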

Why use a service mesh?

Each service performs a specific business function and in order to give users what they want, services need to communicate with each other.

In some cases, one service has to request data from several other services, and a service can receive too many requests at once. A service mesh routes requests from one service to another, making sure all the elements work together seamlessly.

What are the proxies?

They are Layer 7-aware TCP proxies (similar to HAProxy and NGINX). Linkerd uses a Rust “micro-proxy” (linkerd2-proxy) that was built specifically for the service mesh. The choice of proxy is an implementation detail, so other meshes may use different proxies. Istio, for instance, relies on the Envoy proxy.

The data plane:

Each proxy manages both the incoming and the outgoing calls of the service it sits next to. These proxies focus on traffic between services. That is what differentiates service mesh proxies from API gateways or ingress proxies, which focus on calls from the outside world into the cluster as a whole.

The control plane:

This set of components provides the data plane with any machinery it needs to function in a coordinated fashion. This includes service discovery, TLS certificate issuing, metrics aggregation, and so on.

The data plane calls the control plane and reports its state.

The control plane offers an API to allow the user to change and inspect the behavior of the data plane as a whole.

The control plane is composed of a few elements, including:

- a small Prometheus instance that aggregates metrics data from the proxies

- destination (service discovery)

- identity (certificate authority)

- public-api (web and CLI endpoints)
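The metrics aggregation step can be sketched as follows. Each proxy exposes counters in the Prometheus text exposition format, and the control plane's Prometheus collects them so they can be summed across proxies. The metric name `response_total` and its `classification` label are modeled on what linkerd-proxy exports, but treat the exact names as assumptions. The tiny parser is also an illustration only: it ignores timestamps, escaping, and most of the format. A real setup would run PromQL queries against Prometheus instead.

```python
import re
from collections import defaultdict

# Matches sample lines such as: response_total{classification="success"} 42
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')

def parse_samples(text):
    """Yield (name, labels, value) tuples from simplified Prometheus text output."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if m:
            labels = dict(LABEL_RE.findall(m.group("labels") or ""))
            yield m.group("name"), labels, float(m.group("value"))

def success_rate(scrapes):
    """Sum response_total over every proxy's scrape and return the success ratio."""
    totals = defaultdict(float)
    for text in scrapes:
        for name, labels, value in parse_samples(text):
            if name == "response_total":
                totals[labels.get("classification", "unknown")] += value
    total = sum(totals.values())
    return totals["success"] / total if total else None

# Hypothetical scrapes from two meshed pods of the same service.
proxy_a = '''
# TYPE response_total counter
response_total{direction="inbound",classification="success"} 90
response_total{direction="inbound",classification="failure"} 10
'''
proxy_b = '''
response_total{direction="inbound",classification="success"} 100
'''
print(success_rate([proxy_a, proxy_b]))  # 0.95
```

Aggregating in one place is what lets the CLI and dashboard report a single success rate for a service, even though the raw counters live in many separate proxies.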

The data plane consists of linkerd-proxy sidecars, one placed next to each application instance.

After deploying Linkerd in high-availability mode, you typically end up with three replicas of each control plane component. You also end up with hundreds or thousands of data plane proxies, depending on the number of applications you operate.

What is Linkerd?

Linkerd is a service mesh built for Kubernetes. It is fully open source, licensed under the Apache License 2.0, and is a graduated project of the CNCF (Cloud Native Computing Foundation).

Linkerd is said to be the lightest and fastest service mesh available today. It makes running cloud-native services easier, more reliable, safer, and more visible by providing observability for all microservices running in the cluster. It does all of this without requiring any changes to the microservices' source code. It can handle high-traffic applications and is used in production by many organizations, such as PayPal, Expedia, HashiCorp, and more.

Linkerd includes three parts:

- UI (such as the CLI)

- Data plane

- Control plane

CLI

The CLI is usually run outside of the cluster (e.g., on your laptop) and is used to interact with the Linkerd control plane.

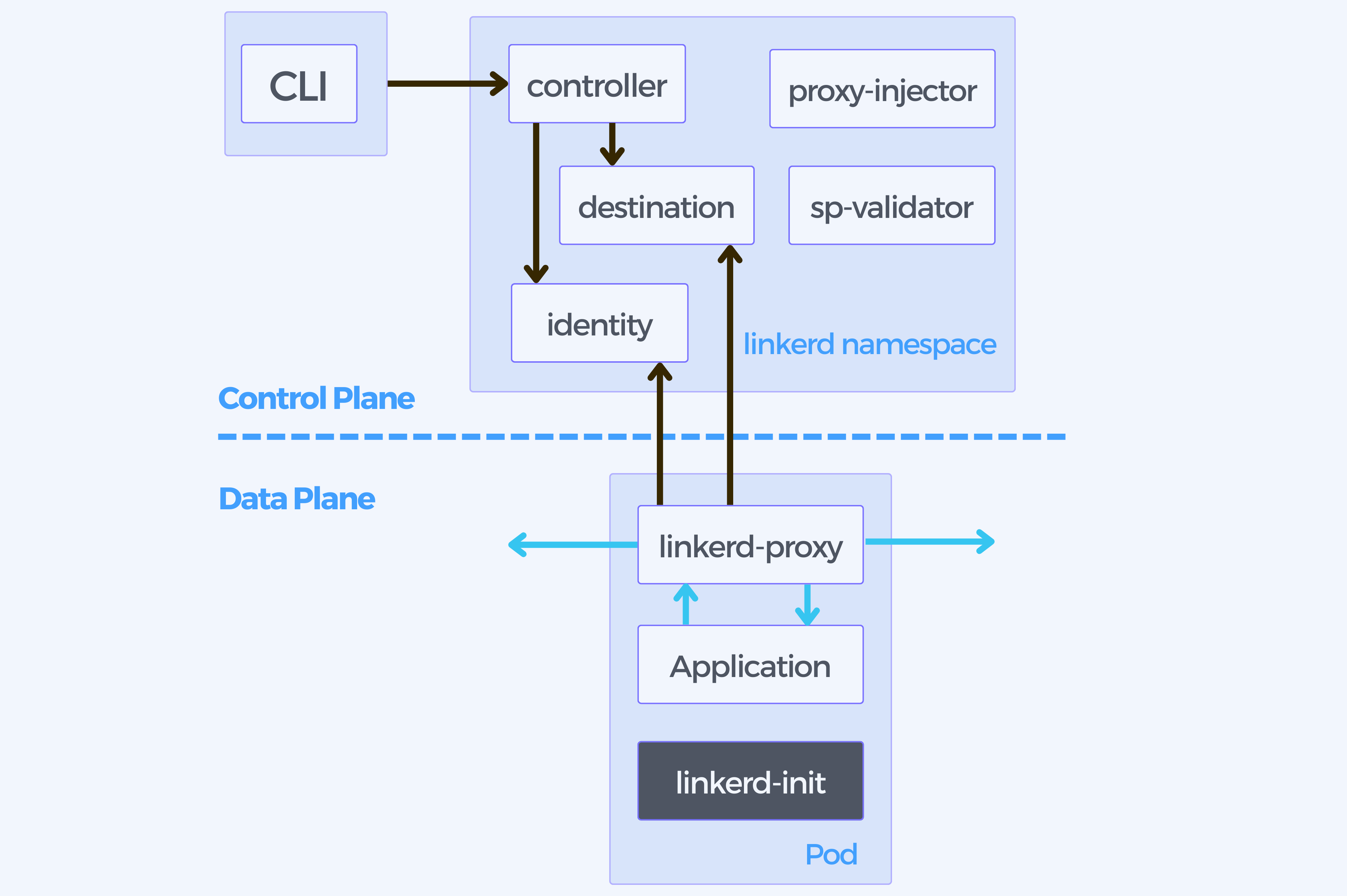

The control plane

It is a set of services that run in a dedicated Kubernetes namespace (linkerd by default) and control Linkerd as a whole. It includes the following components:

Destination service

The proxies in the data plane use the destination service to determine various aspects of their behavior. It is used to fetch:

- service discovery information (i.e. where to send a particular request and the TLS identity expected on the other end)

- policy information about which types of requests are allowed

- service profile information used to inform per-route metrics, retries, and timeouts.
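The following Python sketch is a toy model of the three kinds of answers listed above. All data structures and function names are hypothetical, as is the example authority `web.emojivoto.svc.cluster.local:80`. The real destination service streams updates to proxies over gRPC, and per-route settings come from ServiceProfile resources. The identity strings are assumed to follow Linkerd's default `<service-account>.<namespace>.serviceaccount.identity.linkerd.cluster.local` shape.

```python
import re
from dataclasses import dataclass, field

WEB_ID = "web.emojivoto.serviceaccount.identity.linkerd.cluster.local"
BOT_ID = "vote-bot.emojivoto.serviceaccount.identity.linkerd.cluster.local"

@dataclass
class Endpoint:
    address: str
    tls_identity: str  # identity the calling proxy should expect from this endpoint

@dataclass
class Route:
    name: str
    method: str
    path_regex: str
    timeout_ms: int
    retryable: bool

@dataclass
class Destination:
    endpoints: list
    allowed_clients: set  # policy: client identities allowed to call this service
    routes: list = field(default_factory=list)

# Hypothetical state the destination service might build from the Kubernetes API.
DESTINATIONS = {
    "web.emojivoto.svc.cluster.local:80": Destination(
        endpoints=[Endpoint("10.0.0.12:8080", WEB_ID), Endpoint("10.0.0.13:8080", WEB_ID)],
        allowed_clients={BOT_ID},
        routes=[Route("GET /api/list", "GET", r"/api/list", timeout_ms=300, retryable=True)],
    ),
}

def discover(authority):
    """Service discovery: where to send a request and which TLS identity to expect."""
    return DESTINATIONS[authority].endpoints

def is_allowed(authority, client_identity):
    """Policy: is this client identity allowed to call the service?"""
    return client_identity in DESTINATIONS[authority].allowed_clients

def route_for(authority, method, path):
    """Service profile: match a request to a named route for metrics, retries, timeouts."""
    for route in DESTINATIONS[authority].routes:
        if route.method == method and re.fullmatch(route.path_regex, path):
            return route
    return None  # unmatched requests fall back to default behavior

auth = "web.emojivoto.svc.cluster.local:80"
print([e.address for e in discover(auth)])             # ['10.0.0.12:8080', '10.0.0.13:8080']
print(is_allowed(auth, BOT_ID))                        # True
print(route_for(auth, "GET", "/api/list").timeout_ms)  # 300
```

Note that each endpoint carries its expected TLS identity. This lets the calling proxy check, during the mTLS handshake, that it reached the right workload and not just the right IP address.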

The identity service

The identity service acts as a TLS certificate authority that accepts certificate signing requests (CSRs) from proxies and returns signed certificates. These certificates are issued when each proxy initializes and are used for proxy-to-proxy connections to implement mutual TLS (mTLS).
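The request, authenticate, sign, and verify flow can be illustrated with a deliberately simplified Python sketch. Real Linkerd issues X.509 certificates signed with the issuer's private key. To stay within Python's standard library, this toy swaps in an HMAC over a JSON payload as a stand-in for that signature. It therefore shows only the control flow, not real cryptography. The token table, the function names, and the 24-hour lifetime are all hypothetical assumptions. The token check stands in for the identity service confirming that the requesting pod really runs as the service account it claims.

```python
import hashlib
import hmac
import json
import secrets
import time

CA_KEY = secrets.token_bytes(32)  # toy stand-in for the CA's signing key (NOT X.509)
LIFETIME_S = 24 * 3600            # assumed certificate lifetime for this sketch

# Hypothetical: maps a pod's service-account token to the identity it proves.
TOKENS = {"token-web": "web.emojivoto.serviceaccount.identity.linkerd.cluster.local"}

def _sign(payload: bytes) -> str:
    return hmac.new(CA_KEY, payload, hashlib.sha256).hexdigest()

def issue_certificate(csr: dict, token: str, now: float) -> dict:
    """Toy CA: sign the CSR only if the token proves the requested identity."""
    if TOKENS.get(token) != csr["identity"]:
        raise PermissionError("token does not match requested identity")
    # Only the public key travels in the CSR; the proxy keeps its private key.
    body = {"identity": csr["identity"], "public_key": csr["public_key"],
            "not_after": now + LIFETIME_S}
    payload = json.dumps(body, sort_keys=True).encode()
    return {"body": body, "signature": _sign(payload)}

def verify_certificate(cert: dict, now: float) -> bool:
    """What a peer checks during the mTLS handshake, greatly simplified."""
    payload = json.dumps(cert["body"], sort_keys=True).encode()
    valid_sig = hmac.compare_digest(cert["signature"], _sign(payload))
    return valid_sig and now < cert["body"]["not_after"]

now = time.time()
csr = {"identity": "web.emojivoto.serviceaccount.identity.linkerd.cluster.local",
       "public_key": "proxy-public-key-placeholder"}
cert = issue_certificate(csr, "token-web", now)
print(verify_certificate(cert, now))                   # True
print(verify_certificate(cert, now + LIFETIME_S + 1))  # False: expired
```

Short certificate lifetimes are what make automatic issuance at proxy startup (and periodic renewal) worthwhile: a leaked certificate stops being useful quickly.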

The proxy injector

This is a Kubernetes admission controller. Every time a pod is created, the proxy injector receives a webhook request and inspects the resource for a Linkerd-specific annotation (linkerd.io/inject: enabled). If the annotation is present, the injector mutates the pod’s specification. It automatically adds the proxy-init and linkerd-proxy containers to the pod, along with the relevant start-time configuration.
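The injector's decision can be sketched in Python as a function that takes a pod manifest (as a dict) and returns a JSON Patch (RFC 6902) list. JSON Patch is the form a Kubernetes mutating admission webhook uses to express its changes. The container definitions and image names here are hypothetical placeholders, and container ordering is simplified. The real injector adds much more configuration (ports, environment variables, security contexts) and also honors the annotation when it is set on the namespace.

```python
import json

INJECT_ANNOTATION = "linkerd.io/inject"

# Hypothetical, heavily trimmed container specs.
INIT_CONTAINER = {"name": "linkerd-init", "image": "example.invalid/proxy-init"}
PROXY_CONTAINER = {"name": "linkerd-proxy", "image": "example.invalid/proxy"}

def build_patch(pod: dict) -> list:
    """Return JSON Patch operations that add the mesh containers, or [] to skip."""
    annotations = pod.get("metadata", {}).get("annotations", {})
    if annotations.get(INJECT_ANNOTATION) != "enabled":
        return []  # not opted in: admit the pod unchanged
    spec = pod.get("spec", {})
    names = {c["name"] for c in spec.get("containers", [])}
    if PROXY_CONTAINER["name"] in names:
        return []  # already injected
    patch = []
    if "initContainers" in spec:
        # Append to the existing list.
        patch.append({"op": "add", "path": "/spec/initContainers/-", "value": INIT_CONTAINER})
    else:
        # Create the list, since "/-" cannot target a missing array.
        patch.append({"op": "add", "path": "/spec/initContainers", "value": [INIT_CONTAINER]})
    # A valid pod always has a containers list; this sketch appends the proxy to it.
    patch.append({"op": "add", "path": "/spec/containers/-", "value": PROXY_CONTAINER})
    return patch

pod = {
    "metadata": {"name": "web", "annotations": {"linkerd.io/inject": "enabled"}},
    "spec": {"containers": [{"name": "web", "image": "example.invalid/web"}]},
}
print(json.dumps(build_patch(pod), indent=2))
```

Returning a patch instead of a rewritten manifest keeps the webhook's response small and lets the Kubernetes API server apply the change itself.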

The data plane

It consists of transparent ultralight micro-proxies that run “next” to each service instance in the same pod as the application containers. This is known as the sidecar pattern.

These proxies can handle all TCP traffic to and from the service automatically, and communicate with the control plane for configuration.
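Handling all TCP traffic automatically means the proxy must decide, for each connection, whether the bytes it sees are HTTP it understands or an opaque stream it should simply forward. The following is a minimal Python sketch of that kind of protocol sniffing. It assumes the proxy can peek at the first bytes a client sends. The HTTP/2 connection preface is fixed by the HTTP/2 specification, and an HTTP/1.x request starts with a request line. The function name and method list are illustrative. Linkerd's actual detection logic, written in Rust with buffering and timeouts, is more involved.

```python
H2_PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"
HTTP1_METHODS = {b"GET", b"HEAD", b"POST", b"PUT", b"DELETE",
                 b"CONNECT", b"OPTIONS", b"TRACE", b"PATCH"}

def detect_protocol(first_bytes: bytes) -> str:
    """Classify a connection from the first bytes the client sent."""
    if first_bytes.startswith(H2_PREFACE):
        return "http/2"
    # HTTP/1.x request line: METHOD SP request-target SP HTTP-version CRLF.
    # A real proxy would keep buffering if the line were still incomplete.
    line, sep, _ = first_bytes.partition(b"\r\n")
    parts = line.split(b" ")
    if sep and len(parts) == 3 and parts[0] in HTTP1_METHODS and parts[2].startswith(b"HTTP/1."):
        return "http/1"
    return "opaque"  # fall back to plain TCP forwarding

print(detect_protocol(b"GET /api/list HTTP/1.1\r\nHost: web\r\n\r\n"))  # http/1
print(detect_protocol(H2_PREFACE + b"\x00\x00"))                         # http/2
print(detect_protocol(b"\x16\x03\x01\x02\x00"))  # opaque (looks like a TLS record)
```

The sketch also shows why server-speaks-first protocols (such as MySQL or SMTP) are awkward for this approach: the client sends nothing until the server greets it, so there are no bytes to inspect. Linkerd lets operators mark such ports as opaque or skip them.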

Proxy

The linkerd2-proxy is an ultralight, transparent micro-proxy written in Rust. It is designed specifically for the service mesh use case and is not meant to be used as a general-purpose proxy like HAProxy.

Features include:

- Transparent, zero-config proxying for HTTP, HTTP/2, and arbitrary TCP protocols.

- Automatic Prometheus metrics export for HTTP and TCP traffic.

- Transparent, zero-config WebSocket proxying.

- Automatic, latency-aware, layer-7 load balancing.

- Automatic layer-4 load balancing for non-HTTP traffic.

- Automatic TLS.

- An on-demand diagnostic tap API.

- And lots more.
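Latency-aware load balancing can be sketched in Python with two ideas. First, keep an exponentially weighted moving average (EWMA) of each endpoint's response latency. Second, for each request, compare two randomly chosen endpoints and pick the cheaper one, a technique known as "power of two choices". To my understanding, Linkerd's balancer is built on these ideas using a peak-sensitive EWMA variant. The class names, the smoothing factor `alpha`, and the cost formula below are simplified assumptions, not the proxy's exact algorithm.

```python
import random

class Endpoint:
    def __init__(self, address, alpha=0.3):
        self.address = address
        self.alpha = alpha    # weight given to the newest latency sample
        self.ewma_ms = 0.0    # unobserved endpoints look cheap, so they get tried
        self.in_flight = 0    # requests sent but not yet answered

    def record(self, latency_ms):
        # Blend the new sample into the running average.
        self.ewma_ms = self.alpha * latency_ms + (1 - self.alpha) * self.ewma_ms

    def cost(self):
        # Slow endpoints and busy endpoints both look more expensive.
        return self.ewma_ms * (self.in_flight + 1)

def pick(endpoints, rng=random):
    """Power of two choices: sample two endpoints, send to the cheaper one."""
    if len(endpoints) == 1:
        return endpoints[0]
    a, b = rng.sample(endpoints, 2)
    return a if a.cost() <= b.cost() else b

rng = random.Random(7)
fast, slow = Endpoint("10.0.0.12:8080"), Endpoint("10.0.0.13:8080")
for _ in range(10):
    fast.record(10)
    slow.record(200)
print(pick([fast, slow], rng).address)  # 10.0.0.12:8080
```

Comparing only two random candidates avoids scanning every endpoint on each request. It also avoids the herd effect of always picking the single "best" endpoint, while still steering most traffic away from slow or overloaded replicas.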

The proxy supports service discovery via DNS and the destination gRPC API.

Linkerd init container

This container is added to each meshed pod as a Kubernetes init container that runs before any other containers are started. It uses iptables to route all TCP traffic to and from the pod through the proxy.
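The redirection the init container sets up can be sketched in Python as a function that builds iptables commands for the NAT table. It redirects inbound TCP to the proxy's inbound port and outbound TCP to its outbound port. It also exempts packets sent by the proxy itself, identified by its user ID, so they do not loop back into the proxy. The chain names are hypothetical. The ports 4143 (inbound) and 4140 (outbound), the skipped admin ports 4190/4191, and proxy UID 2102 are assumed to match Linkerd's defaults, so verify them against your installed version. The sketch only builds command strings. Actually running them requires root (NET_ADMIN) inside the pod's network namespace.

```python
import shlex

def redirect_rules(inbound_port=4143, outbound_port=4140, proxy_uid=2102,
                   skip_inbound_ports=(4190, 4191)):
    """Build iptables commands that send a pod's TCP traffic through the proxy."""
    inbound, outbound = "MESH_INBOUND", "MESH_OUTBOUND"  # hypothetical chain names
    rules = [
        # Inbound: traffic arriving at the pod goes to the proxy's inbound port,
        # except traffic for the proxy's own admin ports.
        ["-t", "nat", "-N", inbound],
        *[["-t", "nat", "-A", inbound, "-p", "tcp", "--dport", str(p), "-j", "RETURN"]
          for p in skip_inbound_ports],
        ["-t", "nat", "-A", inbound, "-p", "tcp", "-j", "REDIRECT", "--to-ports", str(inbound_port)],
        ["-t", "nat", "-A", "PREROUTING", "-j", inbound],
        # Outbound: traffic leaving the app goes to the proxy's outbound port.
        ["-t", "nat", "-N", outbound],
        # Packets sent by the proxy itself must pass untouched, or they would loop.
        ["-t", "nat", "-A", outbound, "-m", "owner", "--uid-owner", str(proxy_uid), "-j", "RETURN"],
        # Leave loopback traffic alone.
        ["-t", "nat", "-A", outbound, "-o", "lo", "-j", "RETURN"],
        ["-t", "nat", "-A", outbound, "-p", "tcp", "-j", "REDIRECT", "--to-ports", str(outbound_port)],
        ["-t", "nat", "-A", "OUTPUT", "-j", outbound],
    ]
    return ["iptables " + shlex.join(r) for r in rules]

for cmd in redirect_rules():
    print(cmd)
```

Because this runs as an init container, the rules are in place before the application and proxy containers start. As a result, no connection can slip past the proxy during startup.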

Conclusion

The advantages of using a service mesh for modern microservice-based applications are numerous. Linkerd’s lightweight design and full compatibility with Kubernetes make it one of the best options for cloud-native services today.

Integrating Linkerd into your infrastructure helps your organization navigate the complexities of microservice communication and isolation without requiring development teams to change their applications.

Don’t miss out on the opportunity to unlock the full potential of cloud-native architectures. Reach out now and we will help you take the first steps towards transforming your applications.