OpenStack Use Cases and Data Center Infrastructure

This is the third post in a series of blog articles about OpenStack. In the first installment, we explored the history and significance of OpenStack in the realm of cloud computing. The second post delved into its microservice architecture, detailing how its components work together to deliver scalable and flexible cloud solutions.

Today, we will first compare two types of data center infrastructure, hyper-converged and traditional (non-converged), and then look at real-world OpenStack use cases and case studies, highlighting how organizations have successfully implemented OpenStack, the benefits they’ve experienced, and the challenges they’ve faced. Additionally, we’ll see how OpenStack can be used to build private, public, and even edge clouds.

Comparing Traditional (Non-Converged) and Hyper-Converged Infrastructure (HCI)

Traditionally, IT infrastructure has been built in a non-converged manner, where storage, compute, and networking components were procured, managed, and scaled independently. However, the advent of hyper-converged infrastructure (HCI) has introduced a new paradigm, merging these components into a single, cohesive unit. Let’s explore the differences between these two approaches and understand how each fits into the modern IT landscape.

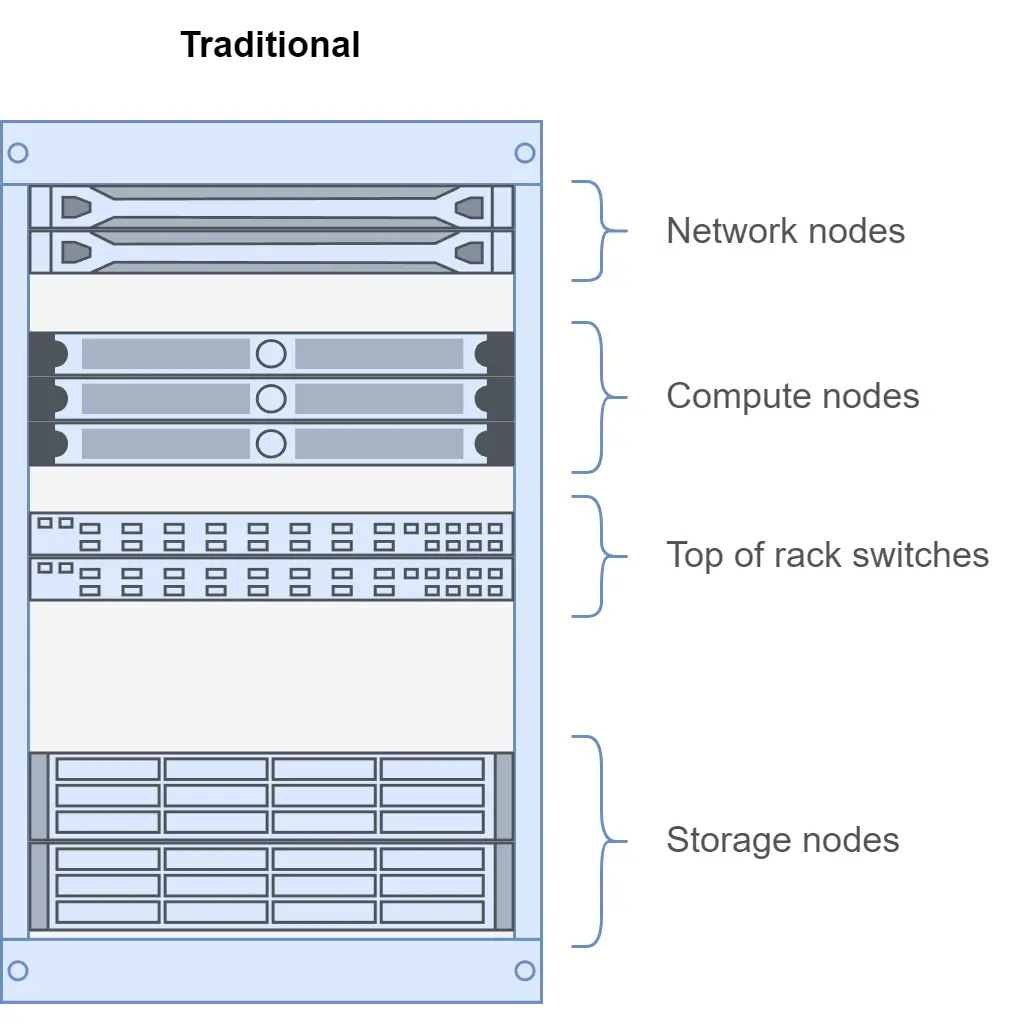

Non-converged cloud infrastructure refers to an environment where different types of hardware and software components are used separately to support various functions within the cloud environment. In a non-converged infrastructure, computing, storage, networking, and virtualization resources are typically managed and provisioned independently.

Types of components in a non-converged cloud infrastructure:

- Computing: This includes servers, virtual machines (VMs), and containers where applications and workloads run. In a non-converged setup, computing resources might be managed separately from storage and networking resources.

- Storage: Non-converged storage involves storage systems like network-attached storage (NAS), storage area networks (SANs), and variations of software-defined storage. These storage systems may operate independently of the computing resources and are managed separately and typically placed next to compute nodes (e.g., in the same rack).

- Networking: Networking components such as routers, switches, and firewalls handle the communication between different parts of the infrastructure and external networks including the Internet. In a non-converged setup, networking resources may not be tightly integrated with computing and storage resources.

The key characteristic of non-converged infrastructure is its lack of integration and unified management across computing, storage, networking, and virtualization resources. This sometimes leads to challenges in scalability, resource optimization, and management complexity compared to converged or hyper-converged infrastructure where these components are tightly integrated and managed as a single system.

The appearance of a data center rack in a non-converged cloud infrastructure can vary depending on the specific hardware and configuration used by the organization. However, a general diagram can look like this:

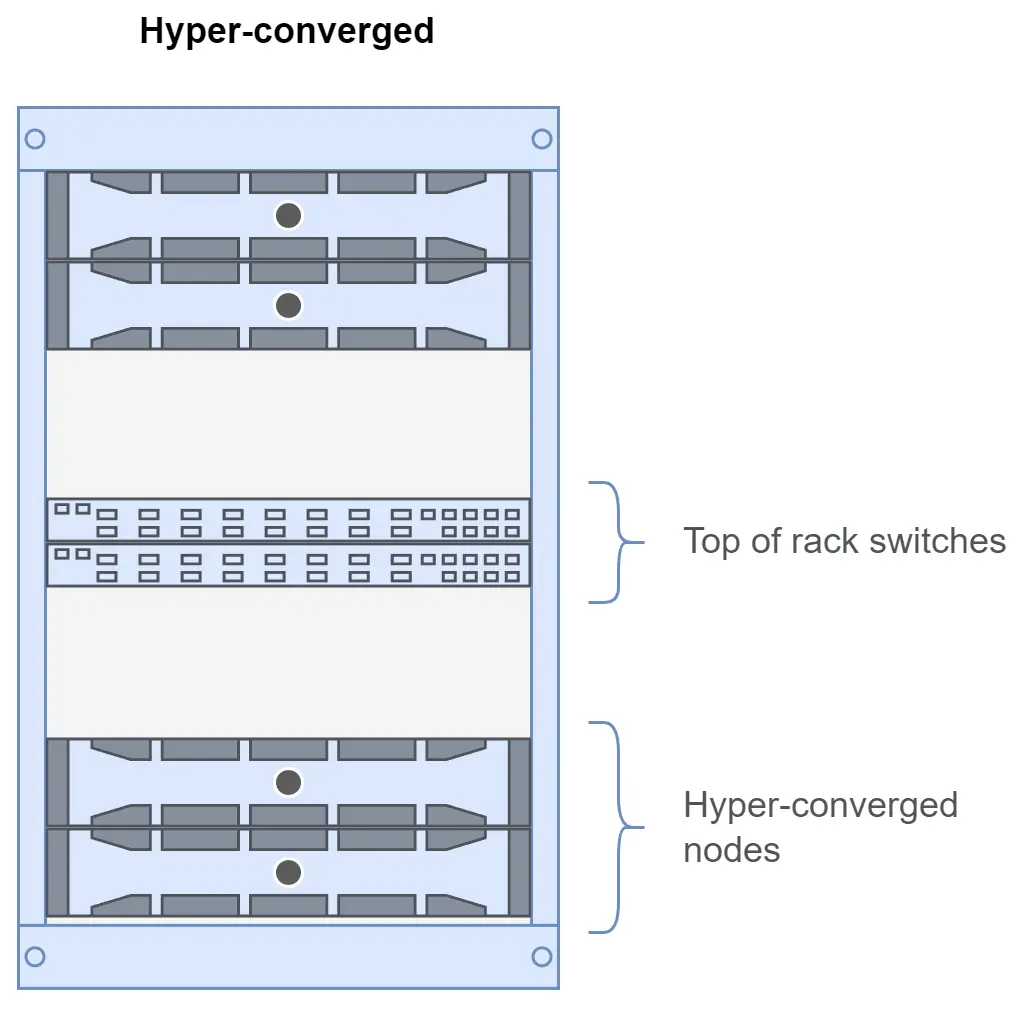

In a hyper-converged infrastructure (HCI), computing, storage, networking, and virtualization are tightly integrated into a single, software-defined platform. This integration is designed to streamline deployment, management, and scalability while reducing complexity and costs.

Here are the key components and characteristics of hyper-converged cloud infrastructure:

- Hardware Convergence: Unlike traditional infrastructure where compute, storage, and networking are separate components, HCI combines these elements into a single hardware platform. Each node in the hyper-converged cluster typically includes CPU, RAM, storage drives (e.g., SSDs, HDDs, NVMe drives), and networking interfaces that can take over the function of a firewall.

- High Availability and Resilience: Hyper-converged infrastructure is designed for high availability (HA) and resilience. Data redundancy and fault tolerance mechanisms are built into the platform on both the software and hardware layers to ensure data integrity and minimize downtime in case of hardware failures or maintenance events. As an example, stored data is typically replicated three times across different drives located in three different hosts, each host comes with two network cards, and so on.

- Storage Virtualization and Data Services: Storage is virtualized and pooled together across all nodes in the hyper-converged cluster. Data services such as deduplication, compression, and data replication are often built into the software-defined storage layer to optimize performance, efficiency, and data protection. A caching layer can be added to further improve performance.

- Scale-out Architecture: Hyper-converged infrastructure is designed to scale out by adding more nodes to the cluster. As new nodes are added, resources including computing power, storage capacity, and network bandwidth are automatically aggregated and made available to the entire infrastructure. This is typically achieved with a so-called leaf-spine architecture, where additional nodes are plugged into leaf switches and each leaf switch connects to all spine switches above it.
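To make the replication and scale-out points above more concrete, here is a minimal Python sketch of the underlying arithmetic. It assumes simple three-way replication with no other overhead, and the names `HciNode`, `usable_capacity_tb`, and `leaf_spine_uplinks`, as well as the 48 TB node size, are hypothetical and chosen only for illustration. Real storage layers reserve additional headroom, so treat the numbers as rough upper bounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HciNode:
    """One hyper-converged node (hypothetical sizing model)."""
    name: str
    raw_storage_tb: float


def usable_capacity_tb(nodes, replicas=3):
    """Usable capacity if every piece of data is stored `replicas` times.

    Assumes each replica lives on a different host, so the cluster
    needs at least `replicas` nodes.
    """
    if len(nodes) < replicas:
        raise ValueError("need at least as many hosts as replicas")
    return sum(node.raw_storage_tb for node in nodes) / replicas


def leaf_spine_uplinks(leaf_count, spine_count):
    """Number of leaf-to-spine links when every leaf connects to every spine."""
    return leaf_count * spine_count


cluster = [HciNode(f"node-{i}", raw_storage_tb=48.0) for i in range(1, 5)]
print(usable_capacity_tb(cluster))   # 4 nodes * 48 TB / 3 replicas = 64.0

# Scaling out: one more node adds its raw capacity to the shared pool.
cluster.append(HciNode("node-5", raw_storage_tb=48.0))
print(usable_capacity_tb(cluster))   # 5 nodes * 48 TB / 3 replicas = 80.0

print(leaf_spine_uplinks(leaf_count=4, spine_count=2))  # 8
```

The takeaway is that with three replicas only about a third of the raw disk capacity is usable, which is worth factoring in when sizing a cluster.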

General rack installation diagram:

Both infrastructure models have their place, with traditional setups offering customization and flexibility, while HCI provides simplicity and efficiency. The choice depends on the organization’s specific needs, but as we can see the HCI is more popular today due to greater flexibility and better price-efficiency.

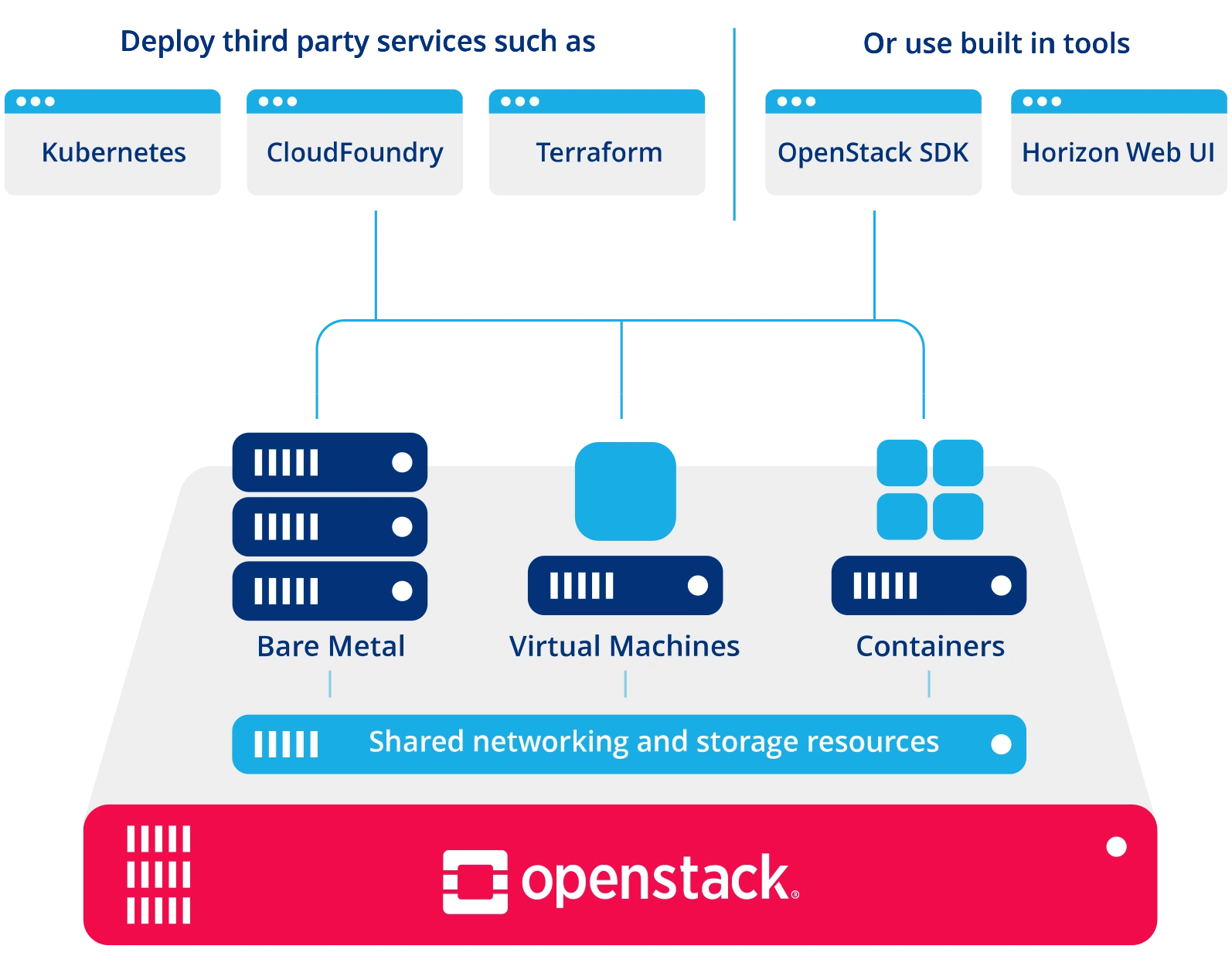

Shifting back to the topic of OpenStack, its flexibility allows it to be utilized within both HCI and traditional infrastructure environments. Let’s explore the advantages and challenges of deploying OpenStack in these two infrastructure paradigms.

OpenStack: Enabling Modern Cloud Infrastructures

Who is using OpenStack?

Organizations across various industries have leveraged OpenStack to enhance their IT capabilities and manage their hyper-converged infrastructures. Let’s explore some real-world use cases to understand the benefits and challenges of implementing OpenStack at various organizations around the world.

OpenStack implementation examples

1. Private Cloud

Example: Volkswagen Group

Volkswagen Group is one of the largest automobile manufacturers in the world and has turned to OpenStack to build a private cloud that would enable rapid development and deployment of applications. By doing so, Volkswagen was able to create a scalable and flexible cloud environment that supported their diverse IT needs.

Benefits:

- Enhanced control over data and infrastructure

- Improved security and compliance

- Huge cost savings compared to public cloud alternatives

- Greater agility in deploying new applications and services

Challenges:

- Integrating OpenStack with existing legacy systems

- Ensuring high availability and disaster recovery

Example: Walmart

Walmart, the global retail giant, adopted OpenStack back in 2014 to enhance its e-commerce operations and support its digital transformation initiatives. By leveraging OpenStack, Walmart was able to build a private cloud that provided the scalability and agility needed to handle peak shopping periods and deliver a seamless customer experience.

Benefits:

- Improved resource utilization

- Reduced operational costs

- Improved scalability to handle fluctuating demand

- Enhanced agility in deploying new applications and services

Challenges:

- Initial setup complexity

- The need for skilled personnel to manage the environment

- Integrating OpenStack with existing IT systems

- Ensuring high availability and performance during peak load

Example: CERN – Managing Big Data for Scientific Research

The European Organization for Nuclear Research (CERN) was among the very early OpenStack adopters. CERN uses OpenStack to manage the vast amounts of data generated by the Large Hadron Collider. By integrating OpenStack, CERN created a scalable and flexible cloud infrastructure that supports its intensive computational and data storage needs at a large scale, with over 300,000 cores and petabytes of storage.

Benefits:

- Scalable infrastructure to handle petabytes of data

- Flexibility to support various research projects and workloads

- Cost savings through the use of open-source technology

Challenges:

- Ensuring data security and privacy

- Managing the complexity of very large scale deployments

2. Public Cloud

Example: OVHcloud

OVHcloud, a leading global cloud provider with over 40 data centers, utilizes OpenStack to deliver public cloud services to a broad range of customers. By adopting OpenStack, OVHcloud was able to offer a highly flexible and cost-effective cloud platform that supports a wide variety of workloads and customer requirements.

Benefits:

- Scalability to accommodate a growing customer base

- Flexibility to support diverse workloads and applications

- Cost efficiency due to open-source nature

- Ability to provide customers with robust and customizable cloud services

Challenges:

- Managing the complexities of a multi-tenant environment

- Ensuring consistent performance and reliability across the platform

Example: Open Telekom Cloud

Open Telekom Cloud, operated by Deutsche Telekom, also leverages OpenStack to provide public cloud services. This implementation emphasizes high security standards and data sovereignty, catering to European customers with stringent data protection requirements.

Benefits:

- Flexibility and scalability

- Provisioning of a large range of services and applications to meet diverse customer needs

Challenges:

- Maintaining high availability and performance in a multi-tenant environment

- Ensuring seamless integration with other cloud services

3. Edge Cloud

Example: Verizon

Verizon has leveraged OpenStack to build edge cloud solutions that bring computing resources closer to end-users and devices. This approach reduces latency and improves performance for applications that require real-time processing, such as IoT and 5G services.

Benefits:

- Reduced latency for real-time applications

- Enhanced performance for end-users

- Flexibility to deploy resources at the network edge

- Scalability to support the growing demands of IoT and 5G

Challenges:

- Managing distributed infrastructure

- Ensuring security and data integrity across edge locations

- Balancing resource allocation between central and edge cloud environments

4. Implementing HCI environments

When integrated with HCI, OpenStack enhances the benefits of hyper-convergence by leveraging its scalable microservice architecture to provide a comprehensive solution for modern IT environments. With OpenStack you can scale components and services individually and add capacity where and when you need it. A wide range of drivers supported by the OpenStack ecosystem allows almost any hardware to be integrated into a cloud.

Benefits:

- Simplified management and automation

- Enhanced scalability

- Flexibility and interoperability

- Cost efficiency

- Enhanced security and compliance

Challenges:

- Complexity of initial deployment and configuration

- Need for OpenStack expertise

- Integration with legacy environments

OpenStack is pivotal in enabling modern cloud environments. It supports a wide range of use cases across private, public, and edge cloud deployments, and this versatility lets it address cloud requirements ranging from centralized data centers to decentralized edge locations.

Let’s take a look at how to leverage OpenStack capabilities to build and manage tailored cloud solutions that meet your specific needs.

Building Private, Public, and Edge Clouds with OpenStack

Private Cloud:

To build a private cloud with OpenStack, organizations can deploy the platform on their own hardware within their data centers. This approach provides complete control over the infrastructure, ensuring security and compliance while offering the flexibility to scale as needed. Key considerations include selecting the appropriate OpenStack components, ensuring network configuration, and planning for high availability and disaster recovery.

Public Cloud:

For public cloud deployments, service providers can leverage OpenStack to offer cloud services to external customers. This involves setting up a multi-tenant environment that can handle diverse workloads and provide robust security and performance. Key considerations include managing strict tenant isolation, monitoring resource usage, and ensuring service level agreements (SLAs) are met.
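As a rough illustration of what an SLA means in practice, the sketch below converts an availability target into a monthly downtime budget. The function names and the 30-day billing period are assumptions made for this example, not part of OpenStack itself.

```python
def downtime_budget_minutes(sla_percent, period_days=30):
    """Minutes of downtime allowed in the period for a given availability target."""
    if not 0 < sla_percent <= 100:
        raise ValueError("sla_percent must be in the range (0, 100]")
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


def sla_met(observed_downtime_minutes, sla_percent, period_days=30):
    """True if the observed downtime stays within the budget."""
    return observed_downtime_minutes <= downtime_budget_minutes(sla_percent, period_days)


# A 99.9% target over 30 days leaves roughly 43 minutes of downtime.
print(round(downtime_budget_minutes(99.9), 1))   # 43.2
print(sla_met(observed_downtime_minutes=30, sla_percent=99.9))  # True
print(sla_met(observed_downtime_minutes=60, sla_percent=99.9))  # False
```

Even a small change in the target matters: moving from 99.9% to 99.99% shrinks the monthly budget from about 43 minutes to just over 4 minutes, which directly influences how much redundancy the platform needs.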

Edge Cloud:

Building an edge cloud with OpenStack involves deploying resources closer to the end-users and devices to reduce latency and improve performance. This requires a distributed infrastructure that can support real-time processing and handle the unique challenges of edge environments. Key considerations include network configuration, data security, and balancing resources between multiple edge locations.
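The balancing act between edge and central locations can be sketched as a simple placement rule: pick the lowest-latency site that still has enough free capacity. This is an illustrative toy model, not how the OpenStack scheduler works; the `Site` class, the `place_workload` function, and all site names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A cloud location as seen from one group of users (hypothetical model)."""
    name: str
    latency_ms: float
    free_vcpus: int


def place_workload(sites, vcpus_needed, max_latency_ms):
    """Return the name of the lowest-latency site that fits, or None.

    A site qualifies if it has enough free vCPUs and meets the latency
    limit. The chosen site's free capacity is reduced accordingly.
    """
    candidates = [
        site for site in sites
        if site.free_vcpus >= vcpus_needed and site.latency_ms <= max_latency_ms
    ]
    if not candidates:
        return None
    best = min(candidates, key=lambda site: site.latency_ms)
    best.free_vcpus -= vcpus_needed
    return best.name


sites = [
    Site("edge-a", latency_ms=4.0, free_vcpus=2),
    Site("edge-b", latency_ms=9.0, free_vcpus=16),
    Site("central", latency_ms=35.0, free_vcpus=512),
]

print(place_workload(sites, vcpus_needed=8, max_latency_ms=20))  # edge-b
print(place_workload(sites, vcpus_needed=8, max_latency_ms=20))  # edge-b
print(place_workload(sites, vcpus_needed=8, max_latency_ms=20))  # None
print(place_workload(sites, vcpus_needed=8, max_latency_ms=50))  # central
```

Once the edge sites are full, latency-sensitive workloads can no longer be placed, while less demanding ones fall back to the central cloud. That trade-off is what capacity planning for edge locations has to account for.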

Conclusion

OpenStack has proven to be a versatile and powerful platform for building all kinds of clouds, from private and public to edge deployments. Organizations like Volkswagen, CERN, Walmart, OVHcloud, Deutsche Telekom, and many others have successfully harnessed OpenStack to meet their unique requirements, showcasing the platform’s maturity, flexibility, and cost-effectiveness.

If you’re considering implementing OpenStack or need assistance with optimizing your OpenStack cloud, don’t hesitate to reach out to us. As a Silver member of and long-time contributor to the OpenInfra Foundation, we know what it takes to run OpenStack in different deployment scenarios and at different scales.

Unlock the full potential of OpenStack’s microservice architecture and discover the advantages it can bring to your organization.