Did you know Karpenter is one of the newest and fastest cluster autoscalers?

First things first: What is an autoscaler?

Kubernetes is the most popular solution today for container management and orchestration. However, one of its best features, its ability to scale infrastructure dynamically based on user demand, often takes a back seat.

Kubernetes has multiple layers of autoscaling functionality:

- Pod-based scaling (Horizontal Pod Autoscaler and Vertical Pod Autoscaler)

- Node-based scaling (Cluster Autoscaler)

A Kubernetes cluster is a set of node machines that run containerized applications. On those nodes, Pods run containers that request resources such as CPU, memory, and sometimes disk or GPU.

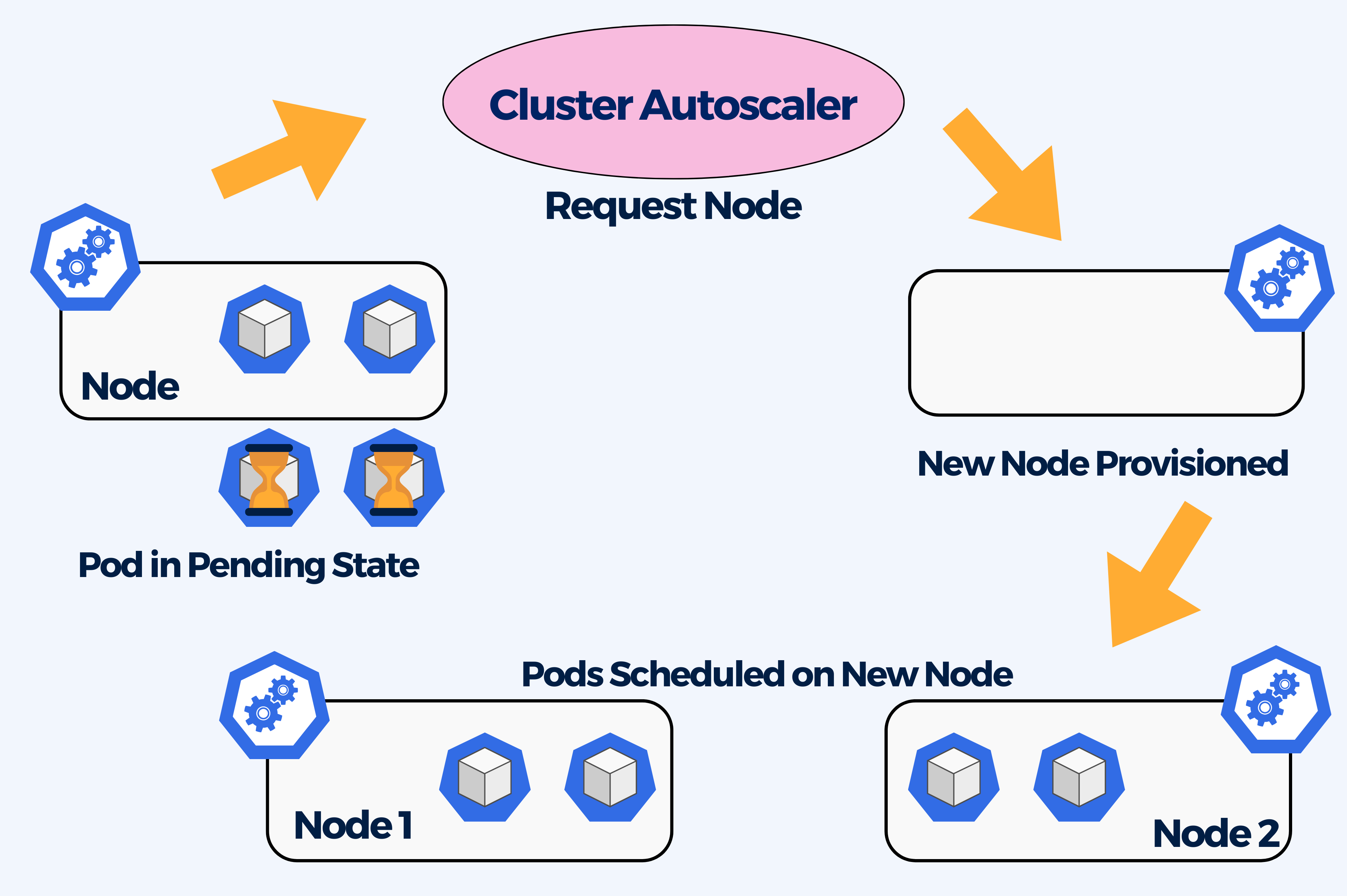

A Cluster Autoscaler automatically adds or removes nodes in a cluster based on the resource requests of Pods. It increases the size of the cluster when pods cannot be scheduled because of resource shortages, and it can remove nodes that are no longer needed. You can configure minimum and maximum limits so that the autoscaler does not scale up or down past a certain number of machines. Nowadays, almost every cloud provider offers a way to scale Kubernetes clusters automatically.
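To make the request-based behavior concrete, here is a minimal Python sketch of how a node-group autoscaler might pick a target size. It is a simplification, not Cluster Autoscaler's actual algorithm (which simulates scheduling pending pods onto candidate nodes): the function `desired_node_count` and its parameters are hypothetical, it only looks at aggregate CPU requests, and it ignores the fact that a single pod cannot be split across nodes.

```python
import math


def desired_node_count(current_nodes: int, pending_cpu_millicores: int,
                       node_cpu_millicores: int, min_nodes: int, max_nodes: int) -> int:
    """Hypothetical, simplified target size for a node group.

    Only the CPU *requests* of pending pods are considered; actual usage is
    ignored, which mirrors the limitation described in this article.
    """
    # Extra nodes needed to cover the pending CPU requests (rounded up).
    extra = math.ceil(pending_cpu_millicores / node_cpu_millicores)
    # Clamp to the configured limits so the result never goes past them.
    return max(min_nodes, min(max_nodes, current_nodes + extra))


# 2.5 vCPU of pending requests on 1-vCPU nodes would need 3 more nodes,
# but the max_nodes limit caps the group at 5.
print(desired_node_count(current_nodes=3, pending_cpu_millicores=2500,
                         node_cpu_millicores=1000, min_nodes=1, max_nodes=5))
# prints 5
```

Because the inputs are requests rather than measured usage, an over-requesting pod triggers a scale-up even if it would sit mostly idle.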

However, cluster autoscalers do have some limitations such as:

- It does not take actual CPU/GPU/memory usage into account, only resource requests.

- Downtime or latency in services can occur because scaling up is not immediate.

- Scaling down is not guaranteed.

This is where Karpenter enters the picture. Karpenter was developed by AWS Labs to overcome these limitations. Let’s learn more about it.

You can check out our article about Kubernetes Autoscalers for a more detailed description.

What is Karpenter?

Karpenter is an open-source, vendor-neutral cluster autoscaling tool. It is a flexible, high-performance service that is meant to run inside Kubernetes clusters.

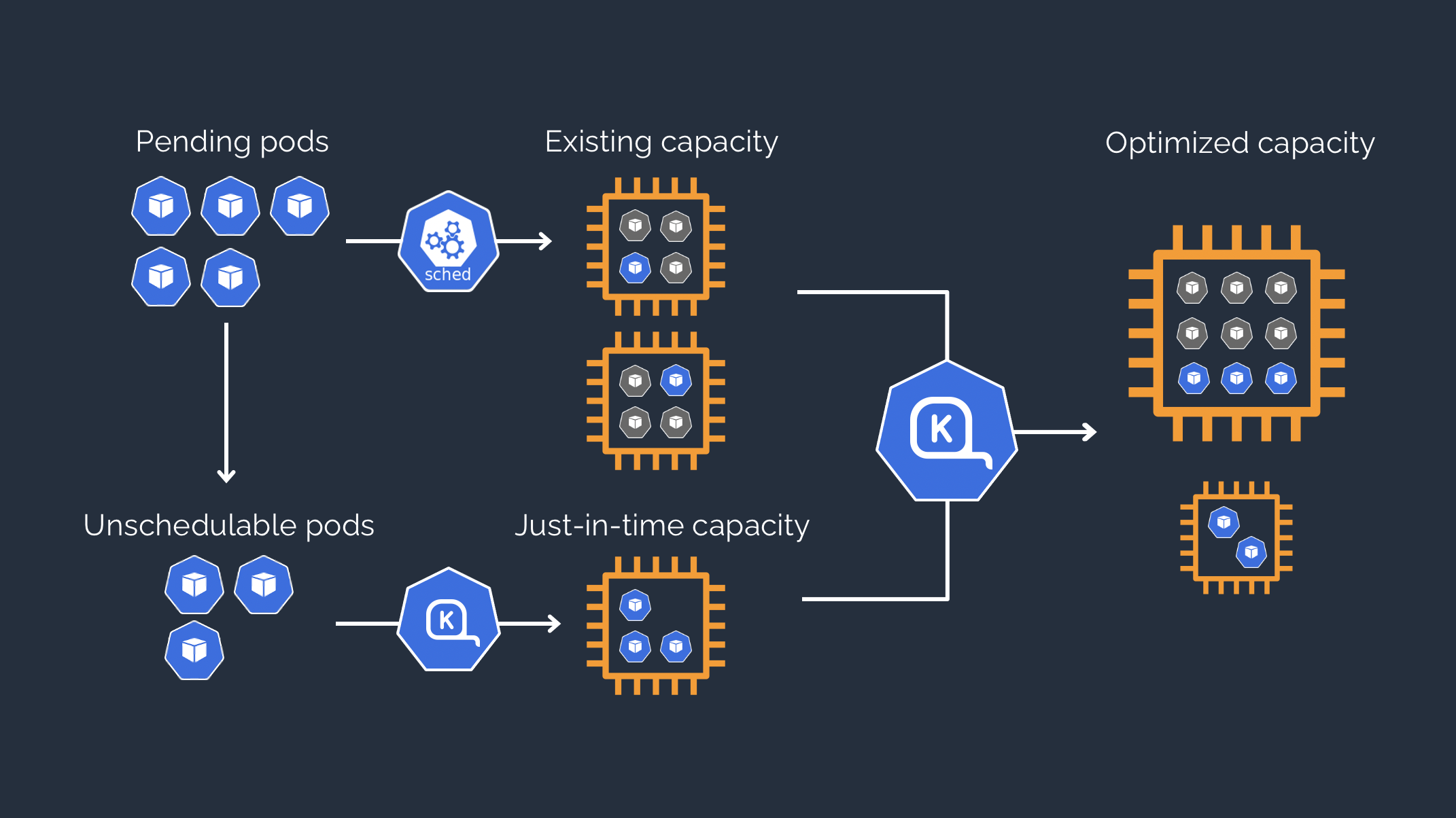

It automatically provisions compute resources as the cluster’s applications need them. For instance, it can launch new nodes in response to unschedulable pods by observing events within the Kubernetes cluster and then sending commands to the underlying cloud provider.

Without Karpenter, Kubernetes users would need to dynamically adjust the compute capacity of their clusters to support applications using Amazon EC2 Auto Scaling groups and the Kubernetes Cluster Autoscaler, which can be challenging and restrictive.

The ability to launch right-sized compute resources automatically in response to changing application load helps improve the availability of applications and the efficiency of clusters. Furthermore, Karpenter provides just-in-time compute resources and can automatically optimize a cluster’s compute resource footprint to reduce costs and improve performance.

Karpenter is designed to let you take full advantage of the cloud with fast and simple compute provisioning for Kubernetes clusters.

How does it work?

Karpenter observes events within the Kubernetes cluster and then sends commands to the underlying cloud provider’s compute service.

When Karpenter is installed, it observes the Pod specifications of unschedulable Pods, calculates the aggregate resource requests and makes decisions to launch and terminate nodes to reduce scheduling latencies and infrastructure cost.
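As a rough illustration of "aggregate the resource requests, then launch a right-sized node", the sketch below sums the CPU and memory requests of pending pods and picks the cheapest instance type that can hold all of them. The catalog, its instance names, and its prices are made up for illustration. The sketch also ignores things a real provisioner must handle, such as kubelet and DaemonSet overhead, scheduling constraints, and splitting pods across several nodes.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu_millicores: int
    memory_mib: int
    hourly_price: float  # illustrative numbers only, not real cloud pricing


@dataclass(frozen=True)
class PodRequest:
    cpu_millicores: int
    memory_mib: int


def cheapest_fit(pods: Sequence[PodRequest],
                 catalog: Sequence[InstanceType]) -> Optional[InstanceType]:
    """Return the cheapest instance type whose capacity covers the pods' summed requests."""
    total_cpu = sum(p.cpu_millicores for p in pods)
    total_mem = sum(p.memory_mib for p in pods)
    candidates = [t for t in catalog
                  if t.cpu_millicores >= total_cpu and t.memory_mib >= total_mem]
    # None means no single instance type is large enough for the whole batch.
    return min(candidates, key=lambda t: t.hourly_price, default=None)


# Hypothetical catalog; names and prices are placeholders.
CATALOG = [
    InstanceType("small", 2000, 4096, 0.05),
    InstanceType("medium", 4000, 8192, 0.10),
    InstanceType("large", 8000, 32768, 0.25),
]

pending = [PodRequest(1500, 2048), PodRequest(1000, 3072)]
choice = cheapest_fit(pending, CATALOG)
print(choice.name if choice else "no single instance fits")
# prints medium
```

The two pods need 2.5 vCPU and 5 GiB together, so "small" is ruled out on CPU and "medium" wins on price over "large".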

Karpenter has a Custom Resource Definition (CRD) called Provisioner. The Provisioner specifies the node provisioning configuration including instance size/type, topology (e.g. availability zone), architecture (e.g. arm64, amd64), and lifecycle type (e.g. spot, on-demand, preemptible).
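To show how such requirements narrow down the available capacity, here is a small Python sketch that checks candidate offerings against a list of requirements. The entries use the `key`/`operator`/`values` shape of a Provisioner's `spec.requirements` and well-known labels (`kubernetes.io/arch`, `topology.kubernetes.io/zone`, `node.kubernetes.io/instance-type`, `karpenter.sh/capacity-type`). The offerings list is hypothetical, and `satisfies` is our own simplified stand-in, not Karpenter's code; it handles only the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators.

```python
from typing import Dict, List

# Requirements in the key/operator/values shape used by a Provisioner's spec.requirements.
REQUIREMENTS: List[dict] = [
    {"key": "kubernetes.io/arch", "operator": "In", "values": ["arm64", "amd64"]},
    {"key": "karpenter.sh/capacity-type", "operator": "In", "values": ["spot"]},
    {"key": "topology.kubernetes.io/zone", "operator": "NotIn", "values": ["us-west-2d"]},
]

# Hypothetical offerings, described by the labels a node launched from them would carry.
OFFERINGS: List[Dict[str, str]] = [
    {"node.kubernetes.io/instance-type": "m6g.large", "kubernetes.io/arch": "arm64",
     "karpenter.sh/capacity-type": "spot", "topology.kubernetes.io/zone": "us-west-2a"},
    {"node.kubernetes.io/instance-type": "m5.large", "kubernetes.io/arch": "amd64",
     "karpenter.sh/capacity-type": "on-demand", "topology.kubernetes.io/zone": "us-west-2b"},
    {"node.kubernetes.io/instance-type": "m5.xlarge", "kubernetes.io/arch": "amd64",
     "karpenter.sh/capacity-type": "spot", "topology.kubernetes.io/zone": "us-west-2d"},
    {"node.kubernetes.io/instance-type": "c5.large", "kubernetes.io/arch": "amd64",
     "karpenter.sh/capacity-type": "spot", "topology.kubernetes.io/zone": "us-west-2b"},
]


def satisfies(labels: Dict[str, str], requirements: List[dict]) -> bool:
    """Return True if the labels meet every requirement (simplified semantics)."""
    for req in requirements:
        key, op, values = req["key"], req["operator"], req.get("values", [])
        if op == "In":
            ok = labels.get(key) in values
        elif op == "NotIn":
            ok = labels.get(key) not in values  # a missing label also passes NotIn
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"operator not handled in this sketch: {op}")
        if not ok:
            return False
    return True


allowed = [o["node.kubernetes.io/instance-type"] for o in OFFERINGS
           if satisfies(o, REQUIREMENTS)]
print(allowed)  # ['m6g.large', 'c5.large']
```

The on-demand offering fails the capacity-type requirement and the `us-west-2d` offering fails the zone exclusion, leaving two candidates for the provisioner to choose from.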

Karpenter can also deprovision a node when it is no longer needed, or to keep nodes up to date with the latest AMI.

Two events trigger finalization: a node reaching its expiry time (ttlSecondsUntilExpired), or the last workload running on a Karpenter-provisioned node terminating (after ttlSecondsAfterEmpty). Either event causes Karpenter to cordon the node, drain its Pods, terminate the underlying compute resource, and delete the node object.
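The two triggers and the finalization sequence can be sketched in Python as below. This is a hypothetical model for illustration: the `Node` fields, the function names, and the plain timestamps are assumptions; `ttl_until_expired` and `ttl_after_empty` stand in for the Provisioner's `ttlSecondsUntilExpired` and `ttlSecondsAfterEmpty` settings; and the returned step strings only name the actions a real controller would perform against the Kubernetes and cloud APIs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    created_at: float             # launch time, in seconds
    empty_since: Optional[float]  # when the last workload pod left; None while pods run


def deprovision_reason(node: Node, now: float,
                       ttl_until_expired: Optional[float] = None,
                       ttl_after_empty: Optional[float] = None) -> Optional[str]:
    """Return why the node should be finalized, or None to keep it."""
    if ttl_until_expired is not None and now - node.created_at >= ttl_until_expired:
        return "expired"
    if (ttl_after_empty is not None and node.empty_since is not None
            and now - node.empty_since >= ttl_after_empty):
        return "empty"
    return None


def finalization_steps(node: Node) -> List[str]:
    """Ordered finalization steps; each string names an action, nothing is executed."""
    return [
        f"cordon {node.name}",                  # stop new pods from landing here
        f"drain {node.name}",                   # evict the remaining pods
        f"terminate instance for {node.name}",  # release the cloud compute resource
        f"delete node object {node.name}",      # remove the Node from the API server
    ]


old = Node("node-a", created_at=0.0, empty_since=None)
idle = Node("node-b", created_at=900.0, empty_since=1000.0)
print(deprovision_reason(old, now=3600.0, ttl_until_expired=3000.0))  # expired
print(deprovision_reason(idle, now=1040.0, ttl_after_empty=30.0))     # empty
```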

Here is an overview of Karpenter’s functions:

- Watching – looks for pods that the Kubernetes scheduler has marked as unschedulable

- Evaluating – evaluates the scheduling constraints requested by the pods (resource requests, node selectors, affinities, tolerations, and topology spread constraints) so it can provision just-in-time node capacity directly, without node groups (groupless node autoscaling)

- Provisioning – launches nodes that meet the requirements of the pods

- Scheduling – schedules the pods to run on the new nodes

- Removing – removes nodes that are no longer used
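The first two steps, watching and evaluating, can be approximated with the Python sketch below. It recognizes unschedulable pods by the `PodScheduled` condition with status `False` and reason `Unschedulable`, which is how the Kubernetes scheduler marks them. It then groups them by `nodeSelector` so that each group could be served by a compatible node. Pods are plain dicts shaped like the Kubernetes Pod object, the helper names are hypothetical, and only node selectors are considered (affinities, tolerations, and topology spread constraints are left out).

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

SelectorKey = Tuple[Tuple[str, str], ...]


def is_unschedulable(pod: dict) -> bool:
    """True if the scheduler has set PodScheduled=False with reason Unschedulable."""
    for cond in pod.get("status", {}).get("conditions", []):
        if (cond.get("type") == "PodScheduled" and cond.get("status") == "False"
                and cond.get("reason") == "Unschedulable"):
            return True
    return False


def group_pending_pods(pods: List[dict]) -> Dict[SelectorKey, List[str]]:
    """Group unschedulable pods by identical nodeSelector (stand-in for full constraint evaluation)."""
    groups: Dict[SelectorKey, List[str]] = defaultdict(list)
    for pod in pods:
        if not is_unschedulable(pod):
            continue  # skip pods the scheduler has not marked unschedulable
        selector = pod.get("spec", {}).get("nodeSelector") or {}
        groups[tuple(sorted(selector.items()))].append(pod["metadata"]["name"])
    return dict(groups)


def make_pod(name: str, selector: Optional[Dict[str, str]] = None,
             unschedulable: bool = True) -> dict:
    """Build a minimal Pod-shaped dict for the example."""
    cond = {"type": "PodScheduled", "status": "False" if unschedulable else "True"}
    if unschedulable:
        cond["reason"] = "Unschedulable"
    return {"metadata": {"name": name},
            "spec": {"nodeSelector": selector or {}},
            "status": {"conditions": [cond]}}


pods = [
    make_pod("web-1", {"kubernetes.io/arch": "arm64"}),
    make_pod("web-2", {"kubernetes.io/arch": "arm64"}),
    make_pod("batch-1"),
    make_pod("api-1", unschedulable=False),
]
print(group_pending_pods(pods))
```

Here the two arm64 pods form one group, the unconstrained batch pod forms another, and the already-scheduled pod is ignored.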

What makes Karpenter great?

Karpenter improves application availability by responding quickly and automatically to changes in application load, scheduling, and resource requirements. This allows new workloads to be placed onto a variety of available compute capacity.

Karpenter reduces infrastructure costs by finding and removing under-utilized nodes, replacing expensive nodes with cheaper alternatives, and consolidating workloads onto more efficient compute resources.
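One ingredient of consolidation, deciding whether an under-utilized node can be removed because its pods fit elsewhere, can be sketched as a first-fit-decreasing packing check. This Python sketch is a hypothetical simplification, not Karpenter's algorithm. It looks only at CPU requests, ignores scheduling constraints and disruption rules, and does not cover the replace-with-a-cheaper-node case.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class NodeState:
    capacity: int                                  # allocatable CPU, millicores
    pods: List[int] = field(default_factory=list)  # CPU requests of pods on the node


def can_remove(node_name: str, cluster: Dict[str, NodeState]) -> bool:
    """True if every pod on node_name fits into the spare CPU of the other nodes."""
    spare = {name: n.capacity - sum(n.pods)
             for name, n in cluster.items() if name != node_name}
    # First-fit decreasing: place the largest requests first.
    for request in sorted(cluster[node_name].pods, reverse=True):
        target = next((name for name, free in spare.items() if free >= request), None)
        if target is None:
            return False  # at least one pod would have nowhere to go
        spare[target] -= request
    return True


cluster = {
    "node-a": NodeState(4000, [500]),    # lightly used
    "node-b": NodeState(4000, [3800]),   # nearly full
    "node-c": NodeState(4000, [3000]),
}
print([name for name in cluster if can_remove(name, cluster)])
# prints ['node-a', 'node-c']
```

Each check is independent: removing `node-a` and `node-c` together would not work, because each relies on the other's spare capacity. A real consolidator therefore has to re-evaluate the cluster after every removal.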

Karpenter also minimizes operational overhead with a set of opinionated defaults in a single, declarative Provisioner resource that can easily be customized. Beyond that resource, no additional configuration is required.

Karpenter has two control loops that maximize the availability and efficiency of the cluster.

- Allocator – a fast-acting controller that ensures pending pods are scheduled onto nodes quickly.

- Reallocator – a slow-acting, cost-sensitive controller that ensures excess node capacity is reallocated as pods are evicted.
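The difference in cadence between the two loops can be illustrated with a toy Python simulation. It is a hypothetical model, not Karpenter's implementation. Time advances in discrete ticks; the "allocator" runs every tick and only adds nodes, while the "reallocator" runs every `reallocate_every` ticks and removes excess nodes. Each node is assumed to hold a fixed number of pods.

```python
import math
from typing import List


def simulate(demand: List[int], pods_per_node: int, reallocate_every: int) -> List[int]:
    """Return the node count after each tick for a given per-tick pod demand."""
    nodes = 0
    history = []
    for tick, pods in enumerate(demand):
        needed = math.ceil(pods / pods_per_node)
        # Allocator (fast): every tick, add capacity as soon as pods are pending.
        if needed > nodes:
            nodes = needed
        # Reallocator (slow): only every few ticks, drop capacity left idle by evicted pods.
        if tick % reallocate_every == 0 and needed < nodes:
            nodes = needed
        history.append(nodes)
    return history


print(simulate([3, 10, 10, 10, 2, 2, 2, 2], pods_per_node=4, reallocate_every=3))
# prints [1, 3, 3, 3, 3, 3, 1, 1]
```

Scale-up follows demand immediately, while the surplus capacity that appears at tick 4 is only reclaimed at tick 6, when the slow loop next runs.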

Conclusion

Karpenter emerges as one of the newest cluster autoscalers, addressing the limitations faced by traditional Kubernetes autoscalers.

It enhances application availability, improves cluster efficiency and is able to launch right-sized compute resources and optimize a cluster’s compute resource footprint.

It proves to be a great tool for organizations looking to optimize their Kubernetes clusters in AWS environments, allowing them to scale with ease and agility while reaping the benefits of cost efficiency and enhanced application performance.

If you’re looking to unlock the full potential of Karpenter and further Kubernetes tools, our team is here to help. Contact us today to explore how we can assist you.